Archive

Terminal Auto Complete

Overview

Command-line completion, or Bash completion, is a Bash user’s best friend. If you had to type out everything you do on the command line, you would be doing a lot of typing. Similar to auto-completion in most text fields these days, Bash completion lets you start typing something and then have Bash complete it for you.

Basic Usage

You activate completion with the TAB key.

So let’s say you wanted to change into a directory named books, and there were 3 directories available, namely books, movies and music. If you were to type

$ cd b

and then press the TAB key, it would end up as

$ cd books

However, if there were another directory next to books that also starts with bo, for example boxes, then Bash would only complete up to what they have in common, which is bo. You can then press the TAB key again to have it show you the available options, like so:

$ cd bo

books/ boxes/

From here you can just type one more character to distinguish them and then press TAB again to have it complete the whole word. So you would type o and press TAB, and your command will be completed.

You get used to this very quickly, and it becomes a very powerful tool.
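
To see which candidates Bash is choosing from, the same lookup can be reproduced with the compgen builtin. This is only a small sketch, and the helper name dir_candidates is made up for illustration.

```shell
# List the directories in the current directory whose names start with a prefix.
# compgen -d generates directory name completions for the given word.
dir_candidates() {
    compgen -d -- "$1"
}
```

With the books, boxes, movies and music directories from the example above, `dir_candidates bo` would list books and boxes, which is why Bash stops at bo and waits for another character.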

Smart Completion

Completing files and directories is the default behaviour. On top of that you get smart completion, which is command specific. These completions are computed by procedures, based on what you have typed so far. For example, if you type:

$ unzip

and then press the TAB key, it could complete only ZIP files, instead of suggesting all available files.

It doesn’t stop there, though. Non-file completions are also possible. For example, the apt-get command has various sub commands like install, remove, source and upgrade. All its sub commands are also completed. For example, typing

$ apt-get

and pressing the TAB key twice, gives you

$ apt-get

autoclean autoremove build-dep check clean dist-upgrade dselect-upgrade install purge remove source update upgrade

You can now start typing one of them and press TAB to have it complete. For example, typing i and pressing TAB will complete into install.

Customizing

It gets even better. All this is very easily customized. If I want the perl command to be completed only with files ending in the .pl extension, I can achieve this by creating a file at /etc/bash_completion.d/perl with the following contents:

complete -f -X '!*.pl' perl

That’s it. Whenever I complete the arguments of the perl command, it will only do so for files ending in .pl.
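
For commands with sub commands, like apt-get above, a completion function can supply the word list instead of a file filter. The following is a minimal sketch and not how apt-get's own completion is written; mytool and its sub commands install, remove and status are hypothetical names.

```shell
# Completion function for a hypothetical command called "mytool".
_mytool_complete() {
    local cur="${COMP_WORDS[COMP_CWORD]}"
    if [ "$COMP_CWORD" -eq 1 ]; then
        # First argument: offer the (made up) sub commands that match.
        COMPREPLY=( $(compgen -W "install remove status" -- "$cur") )
    else
        # Later arguments: fall back to plain file name completion.
        COMPREPLY=( $(compgen -f -- "$cur") )
    fi
}

# Tell Bash to call the function whenever arguments of mytool are completed.
complete -F _mytool_complete mytool
```

Saved in a file under /etc/bash_completion.d/, typing mytool i and pressing TAB should then complete to install.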

Other Shortcuts

There are some other shortcuts also related to command line editing and history.

You can select your previous command by pressing the Up key. Pressing it repeatedly lets you browse back through your history of commands. Pressing the Down key moves you forward through your history again.

Or, if you start typing a command and realize you missed something and want to clear the whole line to start over, you can press Alt+R. This is like pressing and holding backspace until the line is cleared. An alternative is to just press Ctrl+C and return to the command line.

Sometimes you want to perform a series of commands on the same file. For example, to change the ownership and permissions of the same file you could type:

chown root:root myfile

chmod 0644 myfile

This requires typing the filename twice. Bash will do this for you on the second line if you press Alt+. (Alt and the full stop character). For example, typing:

chown root:root myfile

chmod 0644

and then pressing Alt+., will complete your command by taking the last argument of the previous command (i.e. myfile) and inserting it at your current position, resulting in:

chmod 0644 myfile

Conclusion

So Why Love Linux? Because the command line, one of Linux’s true powers, offers powerful editing capabilities.

Custom Boot Screen

The Look and Feel

When I installed a new Ubuntu version, they changed the look of the boot loader screen into a plain terminal-like look and feel. I liked the high resolution look it had before and was determined to bring a similar look into my new installation.

After some investigation I found how to set a background for Grub and configured one I liked. This was all good, except the borders and text for Grub didn’t quite fit in with my background image. Grub, for instance, had its name and version at the top of the screen, which you can’t change or turn off. And the borders/background for selecting boot options weren’t configurable except for changing between a handful of colors.

I was already busy with customization and figured I’d go all out and make it look just the way I wanted it. Using Ubuntu (or Debian) makes this all easy, as I’m able to get the source, build it and package into a .deb ready for installation using only 2 commands. So I started hacking at the source, and eventually came up with something I liked even more than what I had before.

The List

Further, the actual list of items displayed in the boot screen is generated through a script, which detects all the kernels and supported bootable partitions. I also modified these scripts to make sure the list they generate and the list item they select as the default are what I wanted them to be.

I added support for a configuration entry like this:

GRUB_DEFAULT=image:vmlinuz-2.6.32-21-generic

So when anything is installed which triggers the Grub script to run, or I manually run it, this option will instruct the script to use the specified Linux image as the default option. It will also support a value of “Windows”, which when set will make the first Windows partition found the default boot option.

Further, I added functionality so that if my default is configured as a Linux image, the script also creates an extra entry for it with crash dump support enabled. For all other Linux images it just generates the standard and recovery mode entries.

Finally, all Windows and other operating system options would be generated and appended to the end of the list.

Conclusion

So Why Love Linux? Because your imagination sets the boundaries of what is possible. It’s possible and easy to make your computer do exactly what you want it to do.

All Configuration Files are Plain Text

Plain Text Configs?

Almost all of the configuration files in a Linux system are text files under /etc. You get the odd application that stores its config in a binary format, like a SQL database or some other specialized or proprietary format.

The benefit of text, however, is the ease of accessing it. You don’t need to implement an API, which can set you back quite some time if it’s your first implementation, and which is a huge expense if you only need 1 or 2 entries. With the configuration available as plain text you can immediately access it with the myriad of stream processors at your disposal, like grep, sed, awk, cut, cat and sort to name a few, or with the file operations of any programming language.

To give an example, let’s say I wanted to know the UIDs of all the users on the system. I didn’t need their names, just the UIDs. It’s as simple as this (assuming you don’t use something like NIS):

awk -F ':' '{print $3}' /etc/passwd

Or if you wanted to get a list of all hostnames a specified IP is associated with in /etc/hosts:

grep "127.0.0.1" /etc/hosts | cut -f 2- -d ' '
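
The grep and cut one-liner assumes the fields are separated by a single space and also matches addresses like 127.0.0.10. A slightly more forgiving sketch with awk is shown here; the function name hostnames_for_ip and its optional second argument are my own additions for illustration.

```shell
# Print every hostname listed for an exact IP address, one per line.
# Handles tabs as well as spaces and ignores trailing "# comments".
hostnames_for_ip() {
    local ip="$1" file="${2:-/etc/hosts}"
    awk -v ip="$ip" '
        { sub(/#.*/, "") }                                  # drop comments
        $1 == ip { for (i = 2; i <= NF; i++) print $i }     # names follow the IP
    ' "$file"
}
```

Calling `hostnames_for_ip 127.0.0.1` reads /etc/hosts by default, while a second argument points it at another file in the same format.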

A very useful one is where your configuration file’s entries take the format of

RUN_DAEMON="true"

INTERFACE="127.0.0.1"

PORT="7634"

In this case you can easily extract the values from the file. For example, if the configuration file is named /etc/default/hddtemp, and you want to extract the PORT option, you simply need to do:

value=$( . "/etc/default/hddtemp"; echo "$PORT" )

echo "PORT has value: $value"

Or for the same file, if you want to extract those options into your current environment, it’s as simple as doing:

. "/etc/default/hddtemp"

echo "PORT has value: $PORT"

echo "INTERFACE has value: $INTERFACE"

What the above does is evaluate the configuration file as a Bash script, which then sets those configuration options as variables (since they use the same syntax as Bash for setting variables). The difference between the first and the second method is that the second method could potentially override variables you’re already using in your environment/script (imagine already using a variable named PORT at the time of loading the configuration file). The first method loads the configuration in its own environment (a subshell) and only returns the value you requested.

Note that when using these 2 methods, had there been commands in the configuration files they would have been executed. So be aware of this if you decide to use this technique to read your configuration files. It’s very useful, but unless you’re careful you could end up with security problems.
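
If executing the file is a concern, the values can also be read without evaluating anything. This is a rough sketch that assumes simple KEY="value" lines as in the hddtemp example; read_config_value is a name I made up, and it does not handle escapes or multi-line values.

```shell
# Print the value of one key from a KEY="value" style file without executing it.
read_config_value() {
    local file="$1" key="$2" name value
    while IFS='=' read -r name value; do
        if [ "$name" = "$key" ]; then
            value="${value%\"}"   # strip one trailing double quote
            value="${value#\"}"   # strip one leading double quote
            printf '%s\n' "$value"
            return 0
        fi
    done < "$file"
    return 1                      # key not found
}
```

For example, `read_config_value /etc/default/hddtemp PORT` would print the port, and any commands in the file are never run because the file is only read line by line.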

Conclusion

The mere fact that these files are text means you immediately have access to them using methods available to even the most primitive scripting/programming languages, and all existing text capable commands and programs are able to view/edit your configuration files.

Something like the Windows registry surely has its benefits. But in the world of Unix pipes/fifos, having it all text based is a definite plus.

So Why Love Linux? Because it has mostly plain text configuration files.

CrossOver Office

Introduction

I have to admit, this is an odd post. It explains a reason to love Linux, being that it runs CrossOver Office, which allows you to run Windows software on Linux with few problems.

Either way, this says it all. Sometimes you just need to do it. I, personally, feel that Microsoft Office far exceeds the quality of OpenOffice. OpenOffice has a long way to go before it’s as easy to use and powerful as Microsoft Office is. So on all my Linux installations I run Office 2007 inside CrossOver Office.

How does CrossOver Office differ from Wine?

CrossOver Office is based on Wine. Though, being a commercial program that you can only use if you pay for it, there is funding to support certain programs’ compatibility. Wine works very well, though there are many visual glitches and bugs that make running certain programs a headache. And unless you’re a Wine expert you can’t always make these glitches go away.

The team at CrossOver Office takes popular programs and makes compatibility fixes to ensure they run close to, if not as well as, they do on Windows, making this quality support available to the general public.

I feel it’s worth every cent and recommend it to everyone installing Linux. Support the hard working team at CodeWeavers today!

Screenshots

Here are some screenshots of my CrossOver installation. Just see how well these programs run on Linux:

Conclusion

So Why Love Linux? Because CrossOver Office helps to bring the best of both worlds onto your desktop.

Easily Create In-Memory Directory

In Memory

If you have ever had to run a task which performs a lot of on-disk file operations you know how much of a bottleneck the hard drive could be. It’s just not fast enough to keep up with the demands of the program running in the CPU.

So what I usually do when I need to run some very disk intensive tasks is to move it all to an in-memory filesystem. Linux comes with a module called tmpfs, which allows you to mount such a filesystem.

So, assuming my file operations would be happening at /opt/work, what I would do is

- Move /opt/work out of the way, to /opt/work.tmp

- Make a new directory called /opt/work

- Mount the in-memory filesystem at /opt/work

sudo mount -t tmpfs none /opt/work

- Copy the contents of /opt/work.tmp into /opt/work.

- Start the program.

- Then when the program completes I would copy everything back, unmount the in-memory filesystem and clean up.
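
The steps above can be sketched as a pair of shell functions. This is an illustration under assumptions rather than the exact commands I ran: the function names and the DRY_RUN switch are made up, and the cp -a "dir/." form assumes GNU cp.

```shell
# Run a command, or just print it when DRY_RUN=1 is set.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Move the directory aside, mount a tmpfs in its place and copy the data in.
tmpfs_swap_in() {
    local dir="$1"
    run sudo mv "$dir" "$dir.tmp"
    run sudo mkdir "$dir"
    run sudo mount -t tmpfs none "$dir"
    run sudo cp -a "$dir.tmp/." "$dir/"
}

# Copy the results back to disk, unmount the tmpfs and restore the layout.
tmpfs_swap_out() {
    local dir="$1"
    run sudo cp -a "$dir/." "$dir.tmp/"
    run sudo umount "$dir"
    run sudo rmdir "$dir"
    run sudo mv "$dir.tmp" "$dir"
}
```

Running `DRY_RUN=1 tmpfs_swap_in /opt/work` first prints the commands so they can be checked before anything is moved or mounted.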

Now, since the directory where all the operations will be happening isn’t actually on disk (but in memory), it can happen without any hard drive bottleneck.

Here are some benchmark results showing how much faster this is. I was writing 1GB of data to each filesystem. Specifically look at the last bit of the last line.

For the memory filesystem:

quintin@quintin-VAIO:/dev/shm$ dd if=/dev/zero of=test bs=$((1024 * 1024)) count=1024

1024+0 records in

1024+0 records out

1073741824 bytes (1.1 GB) copied, 0.984447 s, 1.1 GB/s

And for the hard drive filesystem:

quintin@quintin-VAIO:/tmp$ dd if=/dev/zero of=test bs=$((1024 * 1024)) count=1024

1024+0 records in

1024+0 records out

1073741824 bytes (1.1 GB) copied, 24.2657 s, 44.2 MB/s

As you can see, the memory filesystem was more than 24 times faster than the hard drive filesystem. I was able to write at only 44.2 MB/s on the hard drive, but 1.1 GB/s on the memory filesystem. Note that this isn’t its maximum, since I had some other bottlenecks here. If you were to optimize this you would be able to write even faster to the memory filesystem. The fact remains that filesystem intensive tasks can run much faster when done this way.

There are some risks involved, in that losing power to the system will cause everything that was in the memory filesystem to be lost. Keep this in mind when using it. In other words, don’t store any critical/important data only in an in-memory filesystem.

The Core of it All

So in the end it all comes down to the fact that you can easily create an in-memory filesystem. All you need to do to achieve this is decide on a directory you want to be in-memory, and mount it as such.

For example, if we were to choose /home/mem as an in-memory directory, we can mount it as follows:

sudo mount -t tmpfs none /home/mem

If we need it to be persistently mounted as such (across system boots), we can add the following line to /etc/fstab:

none /home/mem tmpfs defaults 0 0
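
Before relying on the speed-up, a script may want to confirm that the directory really is a tmpfs mount. A minimal sketch, reading the kernel's mount table from /proc/mounts; the function name is_tmpfs and its optional second argument are assumptions for illustration.

```shell
# Succeed if the given directory is currently a tmpfs mount point.
# The second argument lets another file in /proc/mounts format be checked.
is_tmpfs() {
    local dir="$1" mounts="${2:-/proc/mounts}"
    # Field 2 is the mount point and field 3 the filesystem type.
    awk -v dir="$dir" '
        $2 == dir && $3 == "tmpfs" { found = 1 }
        END { exit !found }
    ' "$mounts"
}
```

It could be used as `is_tmpfs /home/mem || echo "not in memory"` at the top of a disk intensive job.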

Conclusion

So Why Love Linux? Because with a single command you can get a temporary in-memory filesystem running to help you speed up a filesystem intensive task.

Bootable Computer Doctor

The Story

A live distribution is a Linux distribution you can run from a pen drive or CDROM drive, without having to first install it onto your hard drive. This is where the name “live” comes from, as it can be run “live without installation”.

Many times I use some Live CD to run diagnostics, recover a corrupted boot sector, reset the password for a Windows/Linux machine and many other similar tasks provided by live distributions.

You get very useful Live distributions these days. They are most often configured for either CD/DVD or USB pendrive, though some of the smarter ones can use the same image to boot from either. Beyond this, when you boot from them you will (mostly) get a syslinux bootloader screen from which you select what you want to boot.

Here are a few very useful example live distros that I use:

- SystemRescueCd

- Ultimate Boot CD

- Trinity Rescue Kit

- Puppy Linux

- DamnSmallLinux

- Pentoo Linux

So if I just wanted to reset the password for a Windows machine, I would burn a CD from the Ultimate Boot CD image and boot from it. It then has an option for booting ntpasswd, which allows editing of the Windows registry and resetting an account’s password.

In another scenario if I just wanted to reinstall Grub, my Linux bootloader, I would boot DamnSmallLinux to get a terminal from which I can reconfigure my boot loader.

The problem is that depending on what I need to do I might need a different Live distribution every time. I used to carry CDs for each of these, but I would forget a CD in the drive, and sometimes I don’t have my CDs with me, or the machine doesn’t have a CDROM drive. The ideal situation would be to have all of this on a USB pen drive which I carry with me all the time anyway.

So after investigating this, it turned out that the distros themselves don’t like sharing their boot drive with others. Some of them didn’t even support booting from pen drives (only CDROMs).

Open Source DIY

Being open source all these problems are just hurdles to overcome. Nothing more.

I downloaded all of them, and one by one extracted their images into a directory structure of my choice. To ensure they don’t pollute the root directory of my pen drive I put them all into a syslinux subdirectory. This alone will break some of them, since they want their files in the root of the filesystem. Again, just something to hack out.

After this I made my own syslinux bootloader configuration, which has a main menu with an item for each of the distros. If you select one of these items, its original menu will be loaded. I also modified their menus to add an item that returns to my main menu.

On top of the structure of the menus I had to modify the actual boot loader configurations to support my new directory structure as well.

Further, to speed up development I made a mirror image of the whole pen drive into a file, and then used this image for developing my distro collection. For testing I would boot the image with QEMU. This gave me many extra abilities, like backing it up, booting multiple instances and easily reverting to previous versions.

Once I had all of the boot menus and boot loaders configured correctly, all that was left was to actually test all the images and make sure they loaded correctly. This required making some more modifications to the distros’ own init scripts (since they either didn’t support pen drives or because I changed the directory structure they were expecting).

Zeta, the conclusion

In the end I had a bootable pen drive with 6 different live distributions on it, some with both 32bit and 64bit versions available.

I named it Zeta, the sixth letter of the Greek alphabet – which has a value of 7. The intention was to add a 7th distribution. I left open some space for it and will be adding it in the near future (as soon as I’ve decided on which one to add). In the meantime there are 6 other very useful distributions.

Drop me a message if you want the image and instructions for installing it onto your own pendrive.

Here are a few screen shots of my resulting work:

So Why Love Linux? Because within hours I was able to make a “computer doctor” pendrive distribution.

Almost Everything is Represented by a File

Device Files

Other than your standard files for documents, executables, music, databases, configuration and what not, Unix based operating systems have file representations of many other types. For example, all devices/drivers have what appear to be standard files.

In a system with a single SATA hard drive, the drive might be represented by the file /dev/sda. All its partitions will be enumerations of this same file with the number of the partition added as a suffix, for example the first three primary partitions will be /dev/sda1, /dev/sda2 and /dev/sda3.

When accessing these files you are effectively accessing the raw data on the drive.

If you have a CDROM drive, there will be a device file for it as well. Most of the time a symlink to this device file will be created at /dev/cdrom, to make access to the drive more generic. The same goes for /dev/dvd, /dev/dvdrw and /dev/cdrw, the last 2 being for writing to DVDs or CDs. In my case, the actual CD/DVD device is /dev/sr0, which is where all of these links point to. If I wanted to create an ISO image of the CDROM in the drive, I would simply need to mirror whatever data is available in the “file” at /dev/cdrom, since that is all an ISO image really is (a mirror of the data on the CDROM disc).

So to create this ISO, I can run the following command:

dd if=/dev/cdrom of=mycd.iso

This command will read every byte of data from the CDROM disc via the device file at /dev/cdrom, and write it into the filesystem file mycd.iso in the current directory.

When it’s done I can mount the ISO image as follows:

mkdir /tmp/isomount ; sudo mount -o loop mycd.iso /tmp/isomount

Proc Filesystems

On all Linux distributions you will find a directory /proc, which is a virtual filesystem created and managed by the procfs filesystem driver. Some kernel modules and drivers also expose some virtual files via the proc filesystem. The purpose of it all is to create an interface into parts of these modules/drivers without having to use a complicated API. This allows scripts and programs to access it with little effort.

For instance, every running process has a directory in /proc named after its PID. These are the directories in /proc with purely numeric names (the upper limit for PIDs is given by /proc/sys/kernel/pid_max). To see this in action, we execute the ps command and select an arbitrary process. For this example we’ll pick the process with PID 16623, which looks like:

16623 ? Sl 1:55 /usr/lib/chromium-browser/chromium-browser

So, when listing the contents of the directory at /proc/16623 we see many virtual files.

quintin@quintin-VAIO:~$ cd /proc/16623

quintin@quintin-VAIO:/proc/16623$ ls -l

total 0

dr-xr-xr-x 2 quintin quintin 0 2011-05-21 19:48 attr

-r-------- 1 quintin quintin 0 2011-05-21 19:48 auxv

-r--r--r-- 1 quintin quintin 0 2011-05-21 19:48 cgroup

--w------- 1 quintin quintin 0 2011-05-21 19:48 clear_refs

-r--r--r-- 1 quintin quintin 0 2011-05-21 18:05 cmdline

-rw-r--r-- 1 quintin quintin 0 2011-05-21 19:48 coredump_filter

-r--r--r-- 1 quintin quintin 0 2011-05-21 19:48 cpuset

lrwxrwxrwx 1 quintin quintin 0 2011-05-21 19:48 cwd -> /home/quintin

-r-------- 1 quintin quintin 0 2011-05-21 19:48 environ

lrwxrwxrwx 1 quintin quintin 0 2011-05-21 19:48 exe -> /usr/lib/chromium-browser/chromium-browser

dr-x------ 2 quintin quintin 0 2011-05-21 16:30 fd

dr-x------ 2 quintin quintin 0 2011-05-21 19:48 fdinfo

-r--r--r-- 1 quintin quintin 0 2011-05-21 16:36 io

-r--r--r-- 1 quintin quintin 0 2011-05-21 19:48 latency

-r-------- 1 quintin quintin 0 2011-05-21 19:48 limits

-rw-r--r-- 1 quintin quintin 0 2011-05-21 19:48 loginuid

-r--r--r-- 1 quintin quintin 0 2011-05-21 19:48 maps

-rw------- 1 quintin quintin 0 2011-05-21 19:48 mem

-r--r--r-- 1 quintin quintin 0 2011-05-21 19:48 mountinfo

-r--r--r-- 1 quintin quintin 0 2011-05-21 16:30 mounts

-r-------- 1 quintin quintin 0 2011-05-21 19:48 mountstats

dr-xr-xr-x 6 quintin quintin 0 2011-05-21 19:48 net

-rw-r--r-- 1 quintin quintin 0 2011-05-21 19:48 oom_adj

-r--r--r-- 1 quintin quintin 0 2011-05-21 19:48 oom_score

-r-------- 1 quintin quintin 0 2011-05-21 19:48 pagemap

-r-------- 1 quintin quintin 0 2011-05-21 19:48 personality

lrwxrwxrwx 1 quintin quintin 0 2011-05-21 19:48 root -> /

-rw-r--r-- 1 quintin quintin 0 2011-05-21 19:48 sched

-r--r--r-- 1 quintin quintin 0 2011-05-21 19:48 schedstat

-r--r--r-- 1 quintin quintin 0 2011-05-21 19:48 sessionid

-r--r--r-- 1 quintin quintin 0 2011-05-21 19:48 smaps

-r-------- 1 quintin quintin 0 2011-05-21 19:48 stack

-r--r--r-- 1 quintin quintin 0 2011-05-21 16:30 stat

-r--r--r-- 1 quintin quintin 0 2011-05-21 19:48 statm

-r--r--r-- 1 quintin quintin 0 2011-05-21 18:05 status

-r-------- 1 quintin quintin 0 2011-05-21 19:48 syscall

dr-xr-xr-x 22 quintin quintin 0 2011-05-21 19:48 task

-r--r--r-- 1 quintin quintin 0 2011-05-21 19:48 wchan

From this file listing I can immediately see the owner of the process is the user quintin, since all the files are owned by this user.

I can also determine that the executable being run is /usr/lib/chromium-browser/chromium-browser since that is what the exe symlink points to.

If I wanted to see the command line, I can view the contents of the cmdline file, for example:

quintin@quintin-VAIO:/proc/16623$ cat cmdline

/usr/lib/chromium-browser/chromium-browser --enable-extension-timeline-api
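
In a script the same two pieces of information can be collected with a few lines. Note that the arguments in cmdline are separated by NUL bytes, so this sketch converts them to spaces; the function name proc_summary and the optional second argument (an alternative proc root) are my own assumptions.

```shell
# Print the executable and command line of a process from its /proc directory.
proc_summary() {
    local pid="$1" root="${2:-/proc}"
    # exe is a symlink to the running binary.
    printf 'exe: %s\n' "$(readlink "$root/$pid/exe")"
    # cmdline holds the arguments separated by NUL bytes; join them with spaces.
    printf 'cmdline: %s\n' "$(tr '\000' '\n' < "$root/$pid/cmdline" | paste -sd ' ' -)"
}
```

For the process above this would be called as `proc_summary 16623`.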

More Proc Magic

To give another example of /proc files, see the netstat command. If I wanted to see all open IPv4 TCP sockets, I would run:

netstat -antp4

Though, if I am writing a program that needs this information, I can read the raw data from the file at /proc/net/tcp.
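
The addresses in /proc/net/tcp are written as hexadecimal, for example 0100007F:0050. A small sketch for decoding such a field follows; it assumes a little-endian machine such as x86, where the bytes of the IPv4 address appear in reverse order, and the function name decode_proc_net_addr is made up.

```shell
# Convert a "HEXIP:HEXPORT" field from /proc/net/tcp into dotted notation.
# Assumes a little-endian host, so the address bytes are read back to front.
decode_proc_net_addr() {
    local hex_ip="${1%%:*}" hex_port="${1##*:}"
    printf '%d.%d.%d.%d:%d\n' \
        "0x${hex_ip:6:2}" "0x${hex_ip:4:2}" \
        "0x${hex_ip:2:2}" "0x${hex_ip:0:2}" \
        "0x${hex_port}"
}
```

With this, `decode_proc_net_addr 0100007F:0050` prints 127.0.0.1:80.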

What if I need to get details on the system’s CPU and its capabilities? I can read /proc/cpuinfo.

Or if you need the load average information in a script/program you can read it from /proc/loadavg. This same file also contains the PID counter’s value.
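
Reading the file from a script takes a single read call. A minimal sketch, assuming the usual five fields (three load averages, running/total tasks and the last PID); the function name show_load and its optional file argument are hypothetical.

```shell
# Print the load averages and the most recently assigned PID.
show_load() {
    local file="${1:-/proc/loadavg}" one five fifteen tasks last_pid
    read -r one five fifteen tasks last_pid < "$file"
    printf 'load: %s %s %s, last PID: %s\n' "$one" "$five" "$fifteen" "$last_pid"
}
```

Given a file containing `0.20 0.18 0.12 1/80 11206`, it prints `load: 0.20 0.18 0.12, last PID: 11206`.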

Those who have messed around with Linux networking would probably recognize the sysctl command. This allows you to view and set some kernel parameters. All of these parameters are also accessible via the proc filesystem. For example, if you want to view the state of IPv4 forwarding, you can do it with the sysctl command as follows:

sysctl net.ipv4.ip_forward

Alternatively, you can read the contents of the file at /proc/sys/net/ipv4/ip_forward:

cat /proc/sys/net/ipv4/ip_forward

Sys Filesystem

Similar to the proc filesystem is the sys filesystem. It is much more organized and, as I understand it, is intended to supersede /proc and hopefully one day replace it completely. For the time being, though, we have both.

So being very much the same, I’ll just give some interesting examples found in /sys.

To read the MAC address of your eth0 network device, see /sys/class/net/eth0/address.

To read the size of the second partition of your /dev/sda block device (given in 512-byte sectors), see /sys/class/block/sda2/size.

All devices plugged into the system have a directory somewhere in /sys/class, for example my Logitech wireless mouse is at /sys/class/input/mouse1/device. This can be seen with the following command:

quintin@quintin-VAIO:~$ cat /sys/class/input/mouse1/device/name

Logitech USB Receiver
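
Because every network device has its own directory, listing all MAC addresses is just a loop over /sys/class/net. This is a small sketch; the function name list_macs and the optional argument for an alternative directory are assumptions for illustration.

```shell
# Print "device mac" for every network device that exposes an address file.
list_macs() {
    local root="${1:-/sys/class/net}" dev
    for dev in "$root"/*; do
        if [ -r "$dev/address" ]; then
            printf '%s %s\n' "${dev##*/}" "$(cat "$dev/address")"
        fi
    done
}
```

Running `list_macs` without arguments reads the live system, one line per device.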

Network Socket Files

This feature has been disabled in the Bash builds of some distributions, though it remains a very cool virtual file example. These are virtual files for network connections, which Bash handles itself when they are used in a redirection.

You can, for instance, redirect some data into /dev/tcp/10.0.0.1/80, which would then establish a connection to 10.0.0.1 on port 80, and transmit via this socket the data written to the file. This could be used to give basic networking capabilities to languages that don’t have them, like Bash scripts.

The same goes for UDP sockets via /dev/udp.
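
As a rough sketch of what this looks like in practice, the following function sends a minimal HTTP request over such a virtual file and prints the reply. It assumes a Bash build with /dev/tcp support enabled, and http_head is a name I made up.

```shell
# Send an HTTP HEAD request using Bash's /dev/tcp redirection.
http_head() {
    local host="$1" port="${2:-80}"
    {
        # Write the request to the socket, then read the reply from it.
        printf 'HEAD / HTTP/1.0\r\nHost: %s\r\n\r\n' "$host" >&3
        cat <&3
    } 3<>"/dev/tcp/$host/$port"   # open the connection read/write on fd 3
}
```

If the connection cannot be established, the redirection fails and the function returns a non-zero status without running the commands inside the braces.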

Standard IN, OUT and ERROR

Even the widely known STDIN, STDOUT and STDERR streams, most probably available in every operating system and programming language there ever was, are represented by files in Linux. If you for instance wanted to write data to STDERR, you can simply open the file /dev/stderr, and write to it. Here is an example:

echo I is error 2>/tmp/err.out >/dev/stderr

After running this you will see the file /tmp/err.out containing “I is error”, proving that having written the message to /dev/stderr, resulted in it going to the STDERR stream.

Same goes for reading from /dev/stdin or writing to /dev/stdout.

Conclusion

So Why Love Linux? Because the file representation for almost everything makes interacting with many parts of the system much easier. The alternative for many of these would have been to implement some complicated API.

Pipe a Hard Drive Through SSH

Introduction

So, assume you’re trying to clone a hard drive, byte for byte. Maybe you just want to back up a drive before it fails, or you want to do some filesystem data recovery on it. The point is you need to mirror the whole drive. But what if you can’t install a second destination drive into the machine, as is the case for most laptops? Or maybe you just want to do something funky?

What you can do is install the destination drive into another machine and mirror it over the network. If both these machines have Linux installed then you don’t need any extra software. Otherwise you can just boot from a Live CD Linux distribution to do the following.

Setup

We’ll assume the source hard drive on the client machine is /dev/sda, and the destination hard drive on the server machine is /dev/sdb. We’ll be mirroring from /dev/sda on the client to /dev/sdb on the server.

An SSH server instance is also installed and running on the server machine and we’ll assume you have root access on both machines.

Finally, for simplicity in these examples we’ll name the server machine server-host.

The Command

So once everything is set up, all you need to do is run the following on the client PC:

dd if=/dev/sda | ssh server-host "dd of=/dev/sdb"

And that’s it. All data will be

- read from /dev/sda,

- piped into SSH, which will in turn

- pipe it into the dd command on the other end, which will

- write it into /dev/sdb.

You can tweak the block sizes and copy rates with dd parameters to improve performance, though this is the minimum you need to get it done.
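
As one example of such tweaking, the sketch below uses a 1 MiB block size and compresses the stream while it is in transit. The function name clone_drive and the idea of passing the remote command (for example ssh server-host) as trailing arguments are my own assumptions; it also assumes gzip is installed on both ends.

```shell
# Stream a drive (or file) through gzip to a command that writes it elsewhere.
# Usage: clone_drive SOURCE DESTINATION REMOTE_COMMAND...
clone_drive() {
    local src="$1" dst="$2"
    shift 2
    dd if="$src" bs=1048576 2>/dev/null |
        gzip -c |
        "$@" "gunzip -c | dd of='$dst' bs=1048576 2>/dev/null"
}
```

The earlier command would then become `clone_drive /dev/sda /dev/sdb ssh server-host`. Compression mostly helps on slow links or drives with a lot of empty space; on a fast LAN it may just move the bottleneck to the CPU.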

Conclusion

So Why Love Linux? Because its Pipes and FIFOs design is very powerful.

Tunneling with SSH

Tunneling

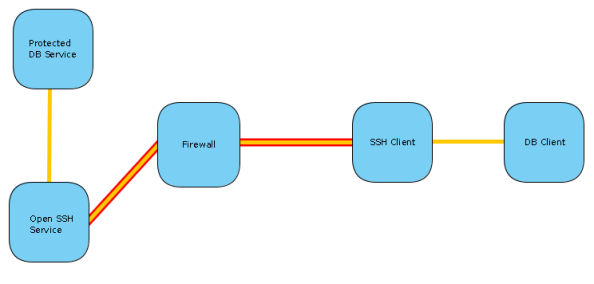

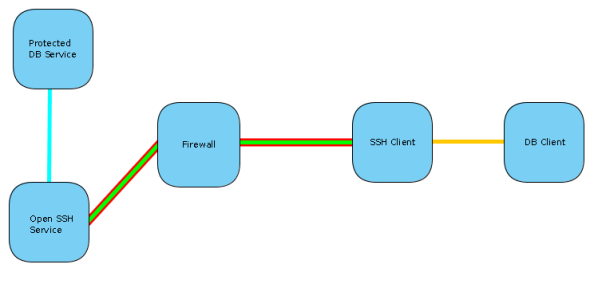

Assume you have a database service, protected from any external traffic by a firewall. In order for you to connect to this service you need to first get past the firewall, and this is where the tunnel comes in. The firewall is configured to allow SSH traffic to another host inside the network, and it’s via the SSH service that the tunnel will be created.

So you would have an SSH client connect to the SSH server and establish a tunnel. Then any traffic from the database client will be intercepted and sent via the tunnel. Once it reaches the host behind the firewall, the traffic will be transmitted to the database server and the connection will be established. Now any data sent/received will be traveling along the tunnel, and the database server/client can communicate with one another as if there is no firewall.

This tunnel is very similar to an IPSec tunnel, except not as reliable. If you need a permanent tunnel to protect production service traffic, I would rather use an IPSec tunnel for it. This is because failed connections between the tunnel ends are not automatically reestablished with SSH as they are with IPSec. IPSec is also supported by most firewall and router devices.

TCP Forwarding

TCP forwarding is a form of tunneling, and very similar to it. The difference is that in the tunneling described above the DB client will establish a connection as if directly trying to connect to the database server, and the traffic will be routed via the tunnel. With TCP forwarding the connection will be established to the SSH client, which is listening on a configured port. Once this connection is established the traffic will be sent to the SSH server, and a preconfigured connection is established to the database from that side.

So where tunneling can use multiple ports and destinations configured on a single tunnel, a separate TCP forwarding tunnel needs to be established for each host/port pair. This is because the whole connection is preconfigured. You have a static port on the client side listening for traffic. Any connection established with this port will be directed to a static host/port on the server side.

Of all the SSH tunneling methods, TCP forwarding is the easiest to set up and the most widely supported in third party clients.
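
With OpenSSH, TCP forwarding is requested with the -L option (and -N when no remote command is needed). The wrapper below is only a sketch: forward_port, the RUN switch and the host names in the usage example are hypothetical, and by default it prints the command instead of running it.

```shell
# Build an ssh local port forward; print it, or execute it when RUN=1 is set.
# Usage: forward_port LOCAL_PORT TARGET_HOST TARGET_PORT SSH_HOST
forward_port() {
    local local_port="$1" target="$2" target_port="$3" gateway="$4"
    # -L listens on LOCAL_PORT and forwards to TARGET_HOST:TARGET_PORT
    # as seen from the SSH server; -N skips running a remote command.
    set -- ssh -N -L "$local_port:$target:$target_port" "$gateway"
    if [ "${RUN:-0}" = "1" ]; then
        "$@"
    else
        printf '%s\n' "$*"
    fi
}
```

For the database scenario, `forward_port 3307 db-host 3306 user@ssh-host` prints `ssh -N -L 3307:db-host:3306 user@ssh-host`, after which the database client would connect to localhost on port 3307.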

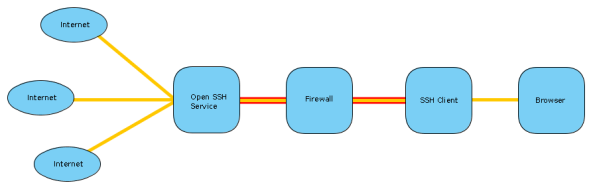

SOCKS Proxy

This is a very exciting and useful tunneling method in OpenSSH. It can be seen as dynamic TCP forwarding for any client supporting SOCKS proxies.

So what you would do is configure the SSH client to create a SOCKS proxy on a specified local port. Then your browser is configured to use this port as a SOCKS proxy. Any web sites you will be visiting will be tunneled via the SSH connection and appear to be coming from the server the SSH service is hosted on.

This allows you to get past filtering proxies. So if your network admin filters certain sites like Facebook, but you have SSH access to a network where such filtering doesn’t happen, you can connect to this unfiltered network via SSH and establish a SOCKS proxy. Using this tunnel you can freely browse the internet.
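
In OpenSSH the SOCKS proxy is requested with the -D option. As before this is a hedged sketch: socks_proxy, the RUN switch and the host name in the usage example are made up, and the command is only printed unless RUN=1 is set.

```shell
# Build an ssh dynamic (SOCKS) forward; print it, or execute it when RUN=1 is set.
# Usage: socks_proxy LOCAL_PORT SSH_HOST
socks_proxy() {
    local port="$1" gateway="$2"
    # -D opens a SOCKS proxy on the local port; -N skips the remote command.
    set -- ssh -N -D "$port" "$gateway"
    if [ "${RUN:-0}" = "1" ]; then
        "$@"
    else
        printf '%s\n' "$*"
    fi
}
```

Calling `socks_proxy 1080 user@ssh-host` prints `ssh -N -D 1080 user@ssh-host`. Once that command is running, the browser (or for example curl with --socks5-hostname localhost:1080) is pointed at port 1080 on localhost.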

Why is this useful?

Since SSH traffic is encrypted, all of these methods can be used to protected against sniffers on the internet and in your local network. The only parts that are not encrypted would be between you and the SSH client (very often configured on your own PC) and the part between the SSH server and the destination (very often a LAN). So if you have software using a network protocol that doesn’t support encryption by itself, or which has a very weak authentication/encryption method, an encrypted tunnel can be used to protect it’s traffic.

Also, if you need to get past firewalls for certain services, and you already have SSH access to another host that is allowed past the firewall for these services, you can get the desired access by tunneling via the SSH host.

Depending on your tunnel configuration, you can hide your identity. This is especially true for SOCKS proxy and TCP forwarding tunnels (but also possible with standard tunnels). The source of the connection will always appear to be the server end establishing the tunneled connection.

You can also avoid being shaped. SSH traffic is often configured to be high priority/low latency traffic. By tunneling via SSH you benefit from this, because the party shaping the traffic cannot distinguish between tunneled SSH traffic and terminal SSH traffic.

There are many more use cases for this extremely powerful technology.

So Why Love Linux? Because it comes with OpenSSH installed by default.

Playing Ping Pong with ARP

ARP

Let me start off by giving a very rough explanation of how devices communicate on Ethernet and WiFi networks. Each network device has a unique hardware address called a MAC address. These are assigned by manufacturers and don’t change for the life of the device. Many manufacturers even place the address on a sticker on the chip. It is possible to change the address with software, though the idea is that the address remains static so the device can communicate on a physical network.

So what happens when you want to connect to a machine on your local network? Assume your IP is IP-A and your destination is IP-B. Your computer will first do what is called an “ARP who-has” broadcast, asking everyone on the network to identify themselves if they are the owner of IP-B. The owner of IP-B will then respond to you saying, “I am IP-B, and I’m at this MAC address”. After this response both machines know each other’s physical address, and are able to send and receive data to and from each other.

Now, the protocol for discovering the MACs as I described above is called ARP, the Address Resolution Protocol. You can get a listing of the known MAC addresses of devices you’ve been communicating with on your LAN by running the following command:

arp -an
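If you want just the IP and MAC columns from that listing, a small filter can pull them out. This is a sketch that assumes the Linux net-tools layout, where each line looks like `? (IP) at MAC [ether] on IFACE`; other systems may print it differently. The function name arp_pairs is made up for this example.

```shell
# Print "IP MAC" pairs from `arp -an` style input on stdin.
# Assumes the Linux net-tools layout: ? (IP) at MAC [ether] on IFACE
# Entries without a resolved MAC (shown as <incomplete>) are skipped.
arp_pairs() {
    awk '$3 == "at" && $4 ~ /:/ {
        ip = $2
        gsub(/[()]/, "", ip)   # strip the parentheses around the IP
        print ip, $4
    }'
}

# Sample line in the assumed format; live use would be: arp -an | arp_pairs
SAMPLE='? (10.0.1.99) at 7b:f1:a8:11:84:c9 [ether] on eth0'
PAIRS=$(printf '%s\n' "$SAMPLE" | arp_pairs)
echo "$PAIRS"
```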

Ping

So, everyone probably knows the ping command. It’s a command that sends a packet to another machine requesting a response packet. It’s often used to test if a machine is up, whether an IP is in use, to measure latency or packet loss, and so on. It’s very simple to use. You simply run ping <ip address> and on Linux it will then continuously send ping, or echo, requests and display any responses. When you abort the command with Ctrl+C you will also get a summary of the session, which includes the number of packets sent, the packet loss percentage, the elapsed time and some other metrics.

However, because of security concerns many people disable ping, so it’s not always possible to use it for a quick test to see if a host is up behind a certain IP address. Sometimes I just need a temporary IP on a specific subnet, and ping alone isn’t enough to quickly determine whether an IP is currently claimed.

This is where arping comes in. arping is a very handy utility that does basically the same as ping, except with ARP who-has packets. When you run it against a given IP address, it will send ARP who-has packets onto the network, and print the responses received.

Here is some example output of arping:

[quintin@printfw ~]$ sudo arping 10.0.1.99

ARPING 10.0.1.99 from 10.0.1.253 eth0

Unicast reply from 10.0.1.99 [7B:F1:A8:11:84:C9] 0.906ms

Unicast reply from 10.0.1.99 [7B:F1:A8:11:84:C9] 0.668ms

Sent 2 probes (1 broadcast(s))

Received 2 response(s)

What’s the Point

This is useful in many cases.

- Ping is not always available, as some system firewalls actively block it, even to other hosts on its LAN. In these cases you can still do a host-up test.

- You can do it to quickly discover the MAC address behind a given IP.

- If you have an IP conflict you can get the MAC addresses of all the hosts claiming the given IP address.

- It’s a quick way to see if a host is completely crashed. If it doesn’t respond to ARP it’s very dead.

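- You can ping hosts even if your IP address is not on the same subnet, as long as you are on the same physical network. ARP requests are not routed, so this doesn’t work across routers.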
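For the MAC discovery and IP conflict cases, the reply lines can be reduced to a list of distinct MAC addresses. This is a sketch that assumes reply lines shaped like the example output above; the function name arping_macs is made up for this example. More than one distinct MAC for the same IP would point to a conflict.

```shell
# Print the distinct MAC addresses seen in arping output read from stdin.
# Assumes reply lines shaped like the example output shown earlier:
#   Unicast reply from 10.0.1.99 [7B:F1:A8:11:84:C9] 0.906ms
arping_macs() {
    sed -n 's/.*reply from [^ ]* \[\([0-9A-Fa-f:]*\)].*/\1/p' | sort -u
}

# Sample taken from the example output; live use would look something like:
#   sudo arping -c 2 10.0.1.99 | arping_macs
SAMPLE='ARPING 10.0.1.99 from 10.0.1.253 eth0
Unicast reply from 10.0.1.99 [7B:F1:A8:11:84:C9] 0.906ms
Unicast reply from 10.0.1.99 [7B:F1:A8:11:84:C9] 0.668ms
Sent 2 probes (1 broadcast(s))
Received 2 response(s)'

MACS=$(printf '%s\n' "$SAMPLE" | arping_macs)
# Count non-empty lines; awk prints 0 when there are none.
MAC_COUNT=$(printf '%s\n' "$MACS" | awk 'NF { n++ } END { print n + 0 }')

echo "$MACS"
echo "distinct MACs: $MAC_COUNT"
```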

I’m sure one can find many more uses of arping. I think it’s a very useful utility.

Windows Firewall Oddities

On a side note, here is something I thought might be interesting. I have noticed that some machines running the AVG Anti-Virus package’s firewall don’t respond to ARP requests all the time. I haven’t investigated it further, though it seems to prevent responses from being sent in certain scenarios. This is definitely a feature that I would prefer didn’t exist, though I’m sure there are benefits to it, like being in complete stealth on a LAN. When I find out more about this, I’ll update this page.

Conclusion

So Why Love Linux? Because it comes preinstalled with and has available to it tons of ultra useful utilities and programs.