Archive

Belkin F5D8053 Support in Ubuntu – Drivers from Nowhere

New Hardware

I recently purchased a new wireless ADSL router, which came with a USB WiFi network adapter. Because the hardware was a fairly new release, the kernel didn't recognize the adapter and I couldn't use it.

However, after some research I found that it is indeed supported by the kernel, just not recognized, because the hardware ID reported by the device has changed. The kernel module it should work with is the RT2870 driver.

If this is the case, it should be easy to fix.

Making it Work

If you have recently rebuilt your kernel, you can skip section (A) and proceed straight to (B) and (C).

A. Preparing Build Environment

The steps for preparing the build environment are based on those needed for Ubuntu 10.04 (Lucid Lynx). If you have problems building the kernel after following these steps (especially on older versions of Ubuntu), see Kernel/Compile for instructions more tailored to your version of Ubuntu.

- Prepare your environment for building

sudo apt-get install fakeroot build-essential
sudo apt-get install crash kexec-tools makedumpfile kernel-wedge
sudo apt-get build-dep linux
sudo apt-get install git-core libncurses5 libncurses5-dev libelf-dev
sudo apt-get install asciidoc binutils-dev

- Create and change into a directory where you want to build the kernel

- Then download the kernel source:

sudo apt-get build-dep --no-install-recommends linux-image-$(uname -r)
apt-get source linux-image-$(uname -r)

- A new directory containing the kernel source code will be created. All remaining steps should be run from inside this directory.

B. Modifying the Source

- Run lsusb and look for your Belkin device. It should look something like this:

Bus 002 Device 010: ID 050d:815f Belkin Components

- Note the hardware identifier, which in the above example is 050d:815f. Save this value for later.

- Inside the kernel source, edit the file drivers/staging/rt2870/2870_main_dev.c

- Search for the string Belkin. You should find a few lines looking like this:

{ USB_DEVICE(0x050D, 0x8053) }, /* Belkin */
{ USB_DEVICE(0x050D, 0x815C) }, /* Belkin */
{ USB_DEVICE(0x050D, 0x825a) }, /* Belkin */

- Duplicate one of these lines and replace the ID with the one you noted from lsusb. Split that ID at the colon and write each half in the same 0x-prefixed form used by the existing lines. In my example, 050d:815f ends up as the following line:

{ USB_DEVICE(0x050D, 0x815F) }, /* Belkin */

- Save the file and proceed to (C).
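If you'd rather not rewrite the ID by hand, the conversion from the lsusb form to the driver's table syntax can be sketched as a small shell function. This is just a convenience; the function name usb_device_line is made up, and the Belkin comment is hard-coded to match the lines above.

```shell
#!/bin/sh
# Hypothetical helper: turn an lsusb ID such as "050d:815f" into a line in the
# USB_DEVICE() table format used in drivers/staging/rt2870/2870_main_dev.c.
usb_device_line() {
    local id="$1"
    # Expect exactly four hex digits, a colon, and four more hex digits.
    if ! printf '%s\n' "$id" | grep -Eq '^[0-9a-fA-F]{4}:[0-9a-fA-F]{4}$'; then
        echo "usage: usb_device_line VVVV:PPPP" >&2
        return 1
    fi
    # Upper-case the hex digits, then split at the colon.
    id=$(printf '%s' "$id" | tr 'a-f' 'A-F')
    printf '{ USB_DEVICE(0x%s, 0x%s) }, /* Belkin */\n' "${id%%:*}" "${id##*:}"
}

# Example: print a table line for every Belkin device lsusb reports.
# (Field 6 of "Bus 002 Device 010: ID 050d:815f Belkin Components" is the ID.)
#   lsusb | awk '/Belkin/ { print $6 }' | while read -r id; do usb_device_line "$id"; done
```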

C. Rebuilding and Reinstalling the Kernel

- Run the following command in the kernel source directory:

AUTOBUILD=1 NOEXTRAS=1 fakeroot debian/rules binary-generic

- This command will take a few minutes to complete. When it’s done you’ll find the kernel image .deb files one level up from the kernel source directory.

- To update your kernel image, you can install these using dpkg. For example:

sudo dpkg -i linux-image-2.6.32-21-generic_2.6.32-21.32_i386.deb

- After installing, reboot your system and the device should be working.

Conclusion

Hardware manufacturers change device IDs for various reasons, mainly when a change to the device requires distinguishing it from previous releases (for example, new functionality added to an existing model). A driver always has a list of devices with which it is compatible. Sometimes the manufacturer's changes don't really change the interface to the device, which means the existing driver would work perfectly fine if only it knew it could control the device.

The change above was exactly such a case: the driver is able to work with the device, but doesn't know it supports this exact model. So all we did was find the hardware ID actually reported by the device and add it to the list of IDs the driver recognizes and accepts.

This is a great thing about Linux and the common Linux distributions. Almost all (if not all) packages you'll find in your distribution are open source, which makes it possible to change whatever needs changing. Had this not been the case, we would have had to wait for whoever maintained the drivers to supply a version that recognizes this device, even though the existing driver could have worked perfectly fine all along.

So in this case, it was like getting a driver for the device out of thin air.

Further, even if you spend time researching this and the change doesn't work, if you're like me you'll be just as excited as if it had worked, because now you can spend time figuring out why it didn't. This is actually what happened to me: the change didn't make my device work. So I'm jumping straight in and fixing it, and will update this post when I get it working.

So Why Love Linux? Because by having the source code to your whole system available, you have complete freedom and control.

Knowing the Moment a Port Opens

Automated Attempts

Sometimes when a server is rebooted, whether a clean soft reboot or a hard reboot after a crash, I need to perform a task on it as quickly as possible. This can be for many reasons, from ensuring all services have started to making a quick change. Sometimes I just need to know the moment a certain service is started so I can notify everyone. The point is that every second counts.

When the server starts up and joins the network, you start receiving ping responses from it. At this point not all services have started yet (on most configurations, at least), so I can't necessarily log into the server or access the specific service. If I try, I get a connection refused or port closed error.

What I usually do in cases where I urgently need to log back into the server is ping the IP address and wait for the first response packet. When I receive this packet I know the server is almost finished booting up. Now I just need to wait for the remote access service to start up. For Linux boxes this is SSH and for Windows boxes it’s RDP (remote desktop protocol).

I could try to repeatedly connect to it, but this is unnecessarily manual, and when every second counts probably less than optimal. Depending on what I’m trying to do I have different methods of automating this.

If I just needed to know that a certain service is started and available again, I would put a netcat session in a loop, which would repeatedly attempt a connection. As long as the service isn’t ready (the port is closed), the netcat command will fail and exit. The loop will then wait for 1 second and try again. As soon as the port opens the connection will succeed and netcat will print a message stating the connection is established and then wait for input (meaning the loop will stop iterating). At this point I can just cancel the whole command and notify everyone that it’s up and running. The command for doing this is as follows:

while true; do nc -v 10.0.0.221 80; sleep 1; done
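The netcat loop above has to be cancelled by hand once the port opens. A variant that stops on its own can be sketched as a shell function that uses bash only for its built-in /dev/tcp redirection (so bash must be installed) and timeout from GNU coreutils. The function name, the 2-second per-attempt timeout and the optional attempt limit are my own assumptions, not part of the original command.

```shell
#!/bin/sh
# Sketch: wait until HOST:PORT accepts TCP connections, then return 0.
# Returns 1 if MAX_TRIES attempts (default 0 = unlimited) all fail.
wait_for_port() {
    local host="$1" port="$2" max_tries="${3:-0}" try=0
    while :; do
        try=$((try + 1))
        # Open (and immediately close) a connection in a child bash; timeout
        # keeps attempts against unreachable hosts from hanging for minutes.
        if timeout 2 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null; then
            echo "$host:$port is open"
            return 0
        fi
        if [ "$max_tries" -gt 0 ] && [ "$try" -ge "$max_tries" ]; then
            return 1
        fi
        sleep 1
    done
}

# Usage, matching the example above:
#   wait_for_port 10.0.0.221 80 && echo "web server is back"
```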

If I needed remote access to the server, I would use a command similar to the one above, but with the remote access command instead, and add a break statement to quit the loop once the command succeeds. For example, for an SSH session I would use the ssh command, and for a remote desktop session the rdesktop command. A typical SSH command looks like this:

while true; do ssh 10.0.0.221 && break; sleep 1; done

This keeps trying the ssh command until a connection is established. As soon as a connection succeeds I receive a shell; when I exit it (with a zero exit status), the loop breaks and I'm returned to my local command prompt.

Automatically Running a Command

If you had to run some command the moment you are able to do so, you could use the above SSH command with some minor modifications.

Let's say you wanted to remove the file /opt/repository.lock as soon as possible. To keep it simple, we're assuming the user you log in as has permission to do so.

The basic idea is that each time you fail to connect, SSH returns a non-zero status. As soon as you connect and run the command, you want to break out of the loop. For that, the successful run needs to return a zero exit status, so it can be distinguished from a failed connection.

The exit status of a successful connection, however, depends on the command being run on the other end. If that command fails for some reason, you don't want SSH to keep reconnecting and running it again, effectively ending up in a loop that won't exit by itself. So you need to ensure its exit status is 0, whether it fails or not, and handle any failure manually.

This can be achieved by executing the true command after the rm command. All the true command does is to immediately exit with a zero (success) exit status. It’s the same command we use to create an infinite while loop in all these examples.

The resulting command is as follows:

while true; do \
    ssh 10.0.0.221 "rm -f /opt/repository.lock ; true" && break; \
    sleep 1; \
done

This will create an infinite while loop and execute the ssh and sleep commands. As soon as an SSH connection is established, it will remove the /opt/repository.lock file and run the true command, which will return a 0 status. The SSH instance will exit with success status, which will cause a break from the while loop and end the command, returning back to the command prompt. As with all the previous examples, when the connection fails the loop will pause for a second, and then try again.
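The true trick works, but it also hides whether rm actually succeeded. An alternative relies on ssh exiting with status 255 when it hits an error of its own (such as a refused connection) and otherwise passing through the remote command's exit status: retry only while the status is 255. A sketch follows; the function name and attempt limit are illustrative, and a remote command that itself exits with 255 would be indistinguishable from a connection failure.

```shell
#!/bin/sh
# Sketch: run a command repeatedly while it exits with status 255 (what ssh
# reports for its own errors, e.g. "connection refused"), sleeping 1 second
# between attempts. Any other status means we got through; it is returned
# as-is, since ssh passes the remote command's exit status through.
# Returns 255 if all MAX_TRIES attempts fail to connect.
retry_while_unreachable() {
    local max_tries="$1" try=1 status=0
    shift
    while [ "$try" -le "$max_tries" ]; do
        if "$@"; then status=0; else status=$?; fi
        if [ "$status" -ne 255 ]; then
            return "$status"
        fi
        if [ "$try" -ge "$max_tries" ]; then
            break
        fi
        try=$((try + 1))
        sleep 1
    done
    return 255
}

# Usage (up to 300 attempts, then report the remote command's status):
#   retry_while_unreachable 300 ssh 10.0.0.221 "rm -f /opt/repository.lock"
#   echo "remote status: $?"
```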

Conclusion

By using these commands instead of repeatedly trying to connect yourself, only about a second passes between the service starting and you being connected (the sleep interval, plus however long the connection attempt itself takes). This can be very useful in emergencies where every second of downtime could cost you money or reputation.

The Linux terminal is a powerful place and I sometimes wonder if those who designed the Unix terminal knew what they were creating and how powerful it would become.

So Why Love Linux? Because the Linux terminal allows you to optimize your tasks beyond human capability.

The Traveling Network Manager

Overview

Networks are such a big part of our lives these days that when you're somewhere without some form of computer network, it feels like something's off or missing, or like it wasn't done well. You notice this especially when you travel with a device capable of joining WiFi networks, like a smartphone, tablet or laptop, and even more so when you depend on these networks for internet access.

Ubuntu, and I assume most modern desktop distributions, comes with a utility called NetworkManager. Its job is to join you to networks and manage these connections. It was designed to make a best-effort attempt at configuring a network for you automatically, with as little user interaction as possible. Even the GUI components, with all their input fields and configuration dialogs, were designed to make managing your networks as painless as possible, keeping in mind the average user's abilities. All complicated setup options were removed, so you can't configure things like multiple IP addresses or select the WiFi channel.

NetworkManager is mostly used through an icon in the system tray. Clicking this icon brings up a list of all available networks. If you select a network, NetworkManager will attempt to connect to it and configure your device via DHCP. If it needs any more information from you (like a WiFi passphrase or SIM card PIN), it will prompt you. Whenever this network becomes available in the future, it will automatically try to connect to it. For WiFi networks, the user has to select the network from the menu the first time. For Ethernet networks, NetworkManager connects automatically, even the first time.

These automatic actions are there to make things more comfortable for the end user. The more advanced user can always disable or fine-tune them as needed, for example to stop automatically connecting to a certain network, or to set a static IP address on a connection.

Roaming Profiles

If you travel a lot you end up with many different network “profiles”. Each location where you join a network has its own setup. If all these locations have DHCP, you rarely need to perform any manual configuration to join the network. You do get the odd location, though, where you need some specific configuration like a static IP address. NetworkManager makes this and roaming very easy and natural to implement, and seamlessly manages this “profile” for you.

You would do this by first joining the network. Once connected, whether or not you were given an IP address, you would open the NetworkManager connections dialog and locate the connection for the network you just joined. From here you would edit it, set your static IP address (or some other configuration option) and save the connection.

By doing this you have effectively created your roaming profile for this network. None of your other connections are affected, so whenever you join any of your other networks they will still work as they did previously, and the new network will have its own specific configuration.
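Newer NetworkManager releases ship the nmcli command-line client with connection editing (it may not be available in the release this post was written against), so the same per-network static address can also be set without the GUI. A sketch, where the connection name and addresses are placeholders and NMCLI can be overridden (for example with echo) to preview the commands:

```shell
#!/bin/sh
# Sketch: give one saved NetworkManager connection a static IPv4 setup,
# leaving every other connection untouched (the "roaming profile" idea).
# Arguments: connection name, address/prefix, gateway.
set_static_ipv4() {
    local conn="$1" addr="$2" gw="$3"
    local nmcli="${NMCLI:-nmcli}"
    "$nmcli" connection modify "$conn" \
        ipv4.method manual ipv4.addresses "$addr" ipv4.gateway "$gw"
    # Re-activate the connection so the new settings take effect now.
    "$nmcli" connection up "$conn"
}

# Usage (placeholder values):
#   set_static_ipv4 "Client Office" 192.168.10.50/24 192.168.10.1
```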

NetworkManager was never really intended to be a roaming profile manager, so other roaming-related options (like proxy servers) won't be configured automatically. I'm sure that with a few scripts and a bit of hacking you could automate setting these up depending on the network you're joining.
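One way to do that hacking is a NetworkManager dispatcher script. Executables in /etc/NetworkManager/dispatcher.d/ are run as root with the interface name and the action (such as up or down) as arguments, and recent versions also export the connection's name in CONNECTION_ID. The sketch below only records a proxy per connection in an environment file; the connection name, the proxy URL and the /run/roaming-proxy.env path are made-up examples, and something else (a login script, say) would still need to read that file.

```shell
#!/bin/sh
# Hypothetical dispatcher script, e.g. saved (executable, owned by root) as
# /etc/NetworkManager/dispatcher.d/50-roaming-proxy
# NetworkManager passes the interface as $1 and the action as $2.

# Map connection names to proxies (made-up examples).
proxy_for_connection() {
    case "$1" in
        "Office WiFi") echo "http://proxy.example.com:3128" ;;
        *)             echo "" ;;   # everywhere else: no proxy
    esac
}

roaming_proxy_main() {
    [ "${2:-}" = "up" ] || return 0   # only act when a connection comes up
    proxy=$(proxy_for_connection "${CONNECTION_ID:-}")
    # Write an environment file other scripts could source (hypothetical path).
    printf 'http_proxy=%s\nhttps_proxy=%s\n' "$proxy" "$proxy" \
        > "${PROXY_ENV_FILE:-/run/roaming-proxy.env}"
}

roaming_proxy_main "$@"
```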

Conclusion

NetworkManager is maybe not the advanced user’s favorite tool. But if you don’t need any of these advanced features I would certainly recommend it.

So Why Love Linux? Because NetworkManager does a brilliant job of making networking comfortable in a very natural way.

Tunneling with SSH

Tunneling

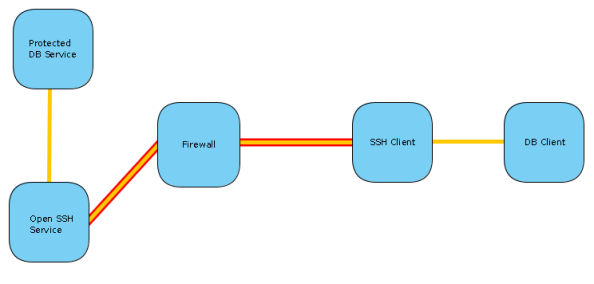

Assume you have a database service, protected from any external traffic by a firewall. In order for you to connect to this service you need to first get past the firewall, and this is where the tunnel comes in. The firewall is configured to allow SSH traffic to another host inside the network, and it’s via the SSH service that the tunnel will be created.

So you would have an SSH client connect to the SSH server and establish a tunnel. Any traffic from the database client is then intercepted and sent via the tunnel. Once it reaches the host behind the firewall, the traffic is transmitted to the database server and the connection is established. From then on, any data sent or received travels along the tunnel, and the database server and client can communicate with one another as if there were no firewall.

This tunnel is very similar to an IPSec tunnel, except not as reliable. If you need a permanent tunnel to protect production service traffic, I would rather use IPSec. This is because with SSH, failed connections between the tunnel ends are not automatically re-established as they are with IPSec. IPSec is also supported by most firewall and router devices.

TCP Forwarding

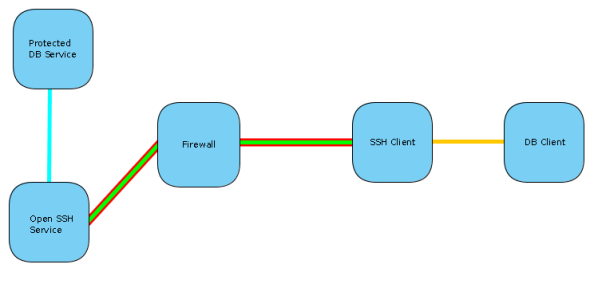

TCP forwarding is a form of tunneling, and very similar to it. The difference is that with the tunneling described above, the DB client establishes a connection as if connecting directly to the database server, and the traffic is routed via the tunnel. With TCP forwarding, the connection is established to the SSH client, which listens on a configured port. Once this connection is established, the traffic is sent to the SSH server, which opens a preconfigured connection to the database from its side.

So where tunneling can carry multiple ports and destinations over a single tunnel, a separate TCP forwarding tunnel needs to be established for each host/port pair. This is because the whole connection is preconfigured. You have a static port on the client side listening for traffic, and any connection established with this port will be directed to a static host/port on the server side.

Of all the SSH tunneling methods, TCP forwarding is the easiest to set up and the most widely supported by third-party clients.
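With OpenSSH, the TCP forwarding described above is set up with the -L option. A sketch, where gateway.example.com (the SSH server behind the firewall), db.internal:5432 (the database server) and local port 15432 are placeholders, and SSH can be overridden to preview the command:

```shell
#!/bin/sh
# Sketch: forward localhost:LOCAL_PORT through the SSH server to the
# database server. All host names and ports are placeholders.
forward_db() {
    local local_port="${1:-15432}"
    # -N: don't run a remote command, just keep the forwarding open.
    # -L port:host:hostport: listen on port locally; for every incoming
    # connection, the SSH server connects to host:hostport on our behalf.
    "${SSH:-ssh}" -N -L "${local_port}:db.internal:5432" user@gateway.example.com
}

# Usage: run forward_db in one terminal, then point the DB client at the
# local end, e.g. for PostgreSQL:
#   psql -h localhost -p 15432
```

By default OpenSSH binds the listening end to the loopback address only, so other machines on your network can't use your forward.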

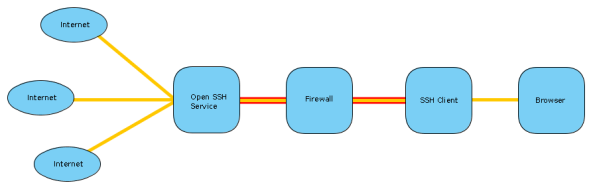

SOCKS Proxy

This is a very exciting and useful tunneling method in OpenSSH. It can be seen as dynamic TCP forwarding for any client supporting SOCKS proxies.

So what you would do is configure the SSH client to create a SOCKS proxy on a specified local port, and then configure your browser to use this port as a SOCKS proxy. Any websites you visit will then be tunneled via the SSH connection and appear to be coming from the server hosting the SSH service.

This allows you to get past filtering proxies. So if your network admin filters certain sites like Facebook, but you have SSH access to a network where such filtering doesn’t happen, you can connect to this unfiltered network via SSH and establish a SOCKS proxy. Using this tunnel you can freely browse the internet.
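In OpenSSH the SOCKS proxy is the -D (dynamic forwarding) option. Another sketch with placeholder names; in the browser you would then enter localhost and the chosen port as the SOCKS v5 host. The curl line in the usage comment is a quick command-line check, where --socks5-hostname makes the proxy resolve host names too, so DNS lookups also go through the tunnel.

```shell
#!/bin/sh
# Sketch: open a SOCKS proxy on localhost:PORT that tunnels through an SSH
# server on an unfiltered network (unfiltered.example.com is a placeholder).
socks_proxy() {
    local port="${1:-1080}"
    # -N: no remote command; -D port: dynamic (SOCKS) forwarding on port.
    "${SSH:-ssh}" -N -D "$port" user@unfiltered.example.com
}

# Usage: run socks_proxy 1080 in one terminal, then in another:
#   curl --socks5-hostname localhost:1080 https://example.com/
```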

Why is this useful?

Since SSH traffic is encrypted, all of these methods can be used to protect against sniffers on the internet and in your local network. The only parts that are not encrypted are between your application and the SSH client (very often running on your own PC) and between the SSH server and the destination (very often a LAN). So if you have software using a network protocol that doesn't support encryption by itself, or that has a very weak authentication/encryption method, an encrypted tunnel can be used to protect its traffic.

Also, if you need to get past firewalls for certain services, and you already have SSH access to another host that is allowed past the firewall for these services, you can get the desired access by tunneling via that SSH host.

Depending on your tunnel configuration, you can hide your identity. This is especially true for SOCKS proxy and TCP forwarding tunnels (but also possible with standard tunnels). The source of the connection will always appear to be the server end establishing the tunneled connection.

Avoiding traffic shaping. SSH traffic is often configured as high-priority/low-latency traffic. By tunneling via SSH you benefit from this, because the party shaping the traffic can't easily distinguish between tunneled traffic and interactive terminal SSH traffic.

There are many more use cases for this extremely powerful technology.

So Why Love Linux? Because it comes with OpenSSH installed by default.

Playing Ping Pong with ARP

ARP

Let me start off by giving a very rough explanation of how devices communicate on ethernet and WiFi networks. Each network device has a unique hardware address called a MAC address. These are assigned by manufacturers and don't change for the life of the device. Many manufacturers even place the address on a sticker on the chip. It is possible to change the address with software, though the idea is that the address remains static so the device can communicate on a physical network.

So what happens when you want to connect to a machine on your local network? Assume your IP is IP-A and your destination is IP-B. Your computer will first do what is called an “ARP who-has” broadcast, asking everyone on the network to identify themselves if they own IP-B. The owner of IP-B will then respond, saying “I am IP-B, and I'm at this MAC address”. After this response both machines know each other's physical address, and are able to send data to each other.

Now, the protocol for discovering the MACs as I described above is called ARP, the Address Resolution Protocol. You can get a listing of the known MAC addresses of devices you’ve been communicating with on your LAN by running the following command:

arp -an
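On Linux, the kernel's ARP table that arp -an lists can also be read directly from /proc/net/arp, whose columns are IP address, HW type, Flags, HW address, Mask and Device. A small sketch for looking up the MAC behind one IP; the function name is hypothetical, and ARP_TABLE only exists so the function can be pointed at a sample file.

```shell
#!/bin/sh
# Sketch: print the MAC address the kernel has cached for an IP, if any.
mac_for_ip() {
    local ip="$1"
    # Skip the header line; flags 0x0 marks an incomplete (unresolved) entry.
    awk -v ip="$ip" 'NR > 1 && $1 == ip && $3 != "0x0" { print $4 }' \
        "${ARP_TABLE:-/proc/net/arp}"
}

# Usage:
#   mac_for_ip 10.0.1.99
```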

Ping

So, everyone probably knows the ping command. It's a command that sends a packet to another machine requesting a response packet. It's often used to test if a machine is up, whether an IP is in use, to measure latency or packet loss, and so on. It's very simple to use. You simply run ping <ip address> and on Linux it will then continuously send ping or echo requests and display any responses. When you abort the command with Ctrl+C you will also get a summary of the session, which includes the number of packets sent, the packet loss percentage, the elapsed time and some other metrics.

However, because of security concerns many people disable ping, so it's not always possible to use it for a quick test of whether a host is up behind a certain IP address. Sometimes I just need a temporary IP on a specific subnet, and ping alone isn't enough to quickly determine whether an IP is currently claimed.

This is where arping comes in. arping is a very handy utility that does basically the same as ping, except with arp who-has packets. When you run it against a given IP address, it will send arp who-has packets onto the network, and print the responses received.

Here is some example output of arping:

[quintin@printfw ~]$ sudo arping 10.0.1.99
ARPING 10.0.1.99 from 10.0.1.253 eth0
Unicast reply from 10.0.1.99 [7B:F1:A8:11:84:C9] 0.906ms
Unicast reply from 10.0.1.99 [7B:F1:A8:11:84:C9] 0.668ms
Sent 2 probes (1 broadcast(s))
Received 2 response(s)
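For the "is this IP already claimed?" question, the iputils arping shown here has a duplicate address detection mode, -D, in which it exits with status 0 only if nobody answered. A sketch wrapping that; eth0 is an assumed default interface, it needs root like the example above, and ARPING can be overridden for testing. Other arping implementations use different options, so check yours first.

```shell
#!/bin/sh
# Sketch: succeed (return 0) if some host answers ARP for IP on IFACE.
# Assumes the iputils arping: -D = duplicate address detection, which exits 0
# when no replies were received; -q = quiet; -c 2 = send two probes.
ip_is_claimed() {
    local ip="$1" iface="${2:-eth0}"
    if "${ARPING:-arping}" -q -D -c 2 -I "$iface" "$ip"; then
        return 1   # nobody answered: the address looks free
    fi
    # Somebody answered. Caution: arping failing outright (e.g. not run as
    # root) also ends up here.
    return 0
}

# Usage (as root):
#   ip_is_claimed 10.0.1.99 eth0 && echo "10.0.1.99 is taken"
```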

What’s the Point

This is useful in many cases.

- Ping is not always available, as some system firewalls actively block it, even from other hosts on the same LAN. In these cases you can still do a host-up test.

- You can use it to quickly discover the MAC address behind a given IP.

- If you have an IP conflict you can get the MAC addresses of all the hosts claiming the given IP address.

- It’s a quick way to see if a host is completely crashed. If it doesn’t respond to ARP it’s very dead.

- You can ping hosts even if you're not on the same IP subnet, as long as you share the same physical network segment (ARP doesn't cross routers).

I’m sure one can find many more uses of arping. I think it’s a very useful utility.

Windows Firewall Oddities

On a side note that I thought might be interesting: I have noticed that some people running the AVG Anti-Virus package's firewall don't always respond to ARP requests. I haven't investigated it further, though it seems the firewall prevents sending responses in certain scenarios. This is definitely a feature I would prefer didn't exist, though I'm sure there are benefits to it, like being completely stealthed on a LAN. When I find out more about this, I'll update this page.

Conclusion

So Why Love Linux? Because it comes preinstalled with, and has available to it, tons of ultra-useful utilities and programs.

Flexible Firewall

I have a very strict incoming filter firewall on my laptop, ignoring all incoming traffic except for port 113 (IDENT), which it rejects with a port-closed ICMP packet. This is to avoid delays when connecting to IRC servers, many of which attempt an IDENT lookup when you connect and would otherwise wait for it to time out.

Now, there is an online test at Gibson Research Corporation called ShieldsUP!, which tests whether your computer is stealthed on the internet. By stealth they mean that it doesn't respond to any traffic originating from an external host. Being in stealth is a good idea, since bots, discovery scans or a stumbling attacker won't be able to determine whether a device is behind the IP address owned by your computer. Even if someone knows for sure that a computer is behind this IP address, less can be discovered about it while it's in stealth. A single open and a single closed port are enough for Nmap to determine some frightening things.

So, since I reject port 113 traffic, I'm not completely stealthed. I wasn't really worried about this, but I read something interesting on the ShieldsUP! page about ZoneAlarm adaptively blocking port 113 depending on whether your computer has an existing relationship with the IP requesting the connection. This is clever: traffic to port 113 from an IP is ignored unless you have previously established a connection with that IP.

Being me, I found this very interesting and decided to implement this in my iptables configuration. The perfect module for this is obviously ipt_recent, which allows you to record the address of a packet in a list, and then run checks against that list with other packets passing through the firewall. I was able to do this by adding a single rule to my OUTPUT chain, and then modifying my existing REJECT rule for port 113. It was really that simple.

The 2 rules, written in iptables-save syntax (prefix each with iptables to add it from the command line), are as follows:

-A OUTPUT ! -o lo -m recent --name "relationship" --rdest --set

-A INPUT ! -i lo -p tcp -m state --state NEW -m tcp --dport 113 -m recent --name "relationship" --rcheck --seconds 60 -j REJECT --reject-with icmp-port-unreachable

The first rule matches any packet leaving your computer through a non-loopback interface and records its destination address in a list named relationship, so all outgoing traffic is captured in this list. The second rule checks every new incoming TCP connection to port 113 against this relationship list: if the source IP is in the list and you sent traffic to it within the last 60 seconds, the packet is rejected with the port-closed ICMP response. If these conditions aren't satisfied, the action isn't performed and the rest of the chain is evaluated (which in my case results in the packet being ignored).
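To add the same two rules from a shell rather than an iptables-save file, each line becomes an iptables command. A sketch: it has to run as root, and IPT can be set to echo to preview the commands. Inserting the INPUT rule at position 1 (rather than appending it) is my assumption, so that it is evaluated before a final catch-all DROP rule; if you already have a REJECT rule for port 113, replace that one instead. While the rules are loaded, the recorded addresses can be inspected in /proc/net/xt_recent/relationship on recent kernels (older kernels used /proc/net/ipt_recent/).

```shell
#!/bin/sh
# Sketch: add the two "relationship" rules with iptables (run as root).
apply_relationship_rules() {
    local ipt="${IPT:-iptables}"
    # Remember the destination of every packet leaving through a
    # non-loopback interface in the "relationship" list.
    "$ipt" -A OUTPUT ! -o lo -m recent --name relationship --rdest --set
    # Reject new IDENT (113/tcp) connections, but only from addresses we sent
    # traffic to in the last 60 seconds; everything else falls through.
    "$ipt" -I INPUT 1 ! -i lo -p tcp -m state --state NEW -m tcp --dport 113 \
        -m recent --name relationship --rcheck --seconds 60 \
        -j REJECT --reject-with icmp-port-unreachable
}

# Usage: preview with IPT=echo apply_relationship_rules, then run as root.
```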

Note that these 2 rules alone won’t make your PC pass this “stealth test”. For steps on setting up a stealth firewall, see the Adaptive Stealth Firewall on Linux guide.

So Why Love Linux? Because built into the kernel is netfilter, an extremely powerful, secure and flexible firewall, and iptables allows you to easily bend it to your will.