Archive

Enablement Security Policies with Sudo

Policies in Linux

There are many types of security policies that allow or prevent users in a multiuser environment from performing a certain task. Some policies allow a specific task, some disallow a task that would otherwise be allowed, and others control how often or how many times a task can be performed, and so on.

Linux doesn’t have a single system or architecture for such policies. Software usually implements these itself and provides a way to manage them if the administrator wants to enable them. Even so, an enormous amount of control can be exercised with process ownership and file permissions or ACLs alone.

However, the root user (the administrator user on Unix systems) has total control on Linux. When you have a root shell there is no file you can’t edit and no process you can’t control. With very high security requirements this can be a problem. SELinux is a system which implements policies in a different way, allowing you to secure your system beyond what is available by default. For more information on SELinux, see SELinux on Wikipedia.

Creating Policies with sudo

Let me explain with a hypothetical example. Assume you wanted to give a specific group of users the ability to clear a certain process’ log file.

To do this the file itself needs to be emptied and a USR1 signal sent to the process to have it reopen the file. The process runs as user and group foo, so the logged in users will not be allowed to send a signal to it. The log file is also owned by the same foo user and group, so they won’t be allowed to write to it, and thus can’t empty it either.

To give them access to this, you can create a group named logflushers which will control access through membership. Then create a script that performs the 2 necessary tasks. Assuming the script is named /usr/bin/flushfoolog, you can add the following line to the /etc/sudoers file to enable the members of the logflushers group to execute the command as the foo user:

%logflushers ALL=(foo) NOPASSWD: /usr/bin/flushfoolog

With this in place any of logflushers group’s members can flush the log by running:

sudo -u foo flushfoolog

If the user is not part of the logflushers group and they try to run this, they’ll get an error like the following:

Sorry, user quintin is not allowed to execute '/usr/bin/flushfoolog' as foo on localhost.
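For reference, here is a minimal sketch of what the flushfoolog script itself might contain. The log file and PID file locations are assumptions made up for illustration; the real paths depend on how the foo process is set up.

```shell
#!/bin/sh
# Minimal sketch of /usr/bin/flushfoolog.
# The LOGFILE and PIDFILE defaults are assumed paths, not taken from a real service.
LOGFILE=${LOGFILE:-/var/log/foo/foo.log}
PIDFILE=${PIDFILE:-/var/run/foo.pid}

# flush_log LOGFILE PID: empty the log, then ask the process to reopen it.
flush_log() {
    # Truncate in place rather than deleting, so ownership and permissions stay intact.
    : > "$1" || return 1
    # USR1 tells the process to reopen its log file.
    kill -USR1 "$2"
}

# Only act when the PID file is readable.
if [ -r "$PIDFILE" ]; then
    flush_log "$LOGFILE" "$(cat "$PIDFILE")"
else
    echo "PID file $PIDFILE not found" >&2
fi
```

Both steps need the privileges of the foo user, which is exactly what the sudoers line grants to members of logflushers.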

Simplifying the Command

The command to flush the foo log seems a bit redundant. You can simplify it by adding the following to the top of the /usr/bin/flushfoolog bash script. If it’s not a bash script you just need to implement the same logic in whatever language it is.

targetuser=foo
if [ $(id -u) -ne $(id -u $targetuser) ]
then
    sudo -u $targetuser "$0"
    exit $?
fi

What this does is check if the script is being run as the foo user. If it’s not, then it will execute the same command as the foo user, using sudo. If a user isn’t granted permission to run this command via sudo (because they’re not in the logflushers group), then they’ll get the error saying they’re not allowed to run this command as the foo user.

Conclusion

Sudo makes it very easy to control or grant access to commands for certain users. When combined with scripts, access control can be made even finer by performing programmatic checks or further access control inside the script.

So Why Love Linux? Because it’s so simple to implement flexible access control when combining sudo and scripting.

TrueCrypt – Open Source Security

Overview

TrueCrypt is a very useful program. It allows you to encrypt your data either by encrypting your whole partition/hard drive, or by creating a file which is mounted as a virtual drive. I usually prefer the latter option, where I create a file of a certain size and then have it mounted to somewhere in my home directory. Everything private/personal would then be stored inside this directory, which results in it being encrypted. I would then be prompted at boot time for a password, which is needed to have this file decrypted and the directory become available.

I have a second smaller encrypted file which I also carry around on my pendrive, along with a TrueCrypt installation for both Windows and Linux. This second file contains some data like my private keys, certificates, passwords and other information I might need on the road.

Encrypting your Data

When you create your encrypted drive you are given the option of many crypto and hash algorithms and combinations of these. Each option has its own strength and speed, so with this selection you can decide on a balance between performance and security. On top of this you can also select the filesystem you wish to format the drive with, and when it comes time to format the drive you can improve the security of the initialization by supplying true randomness in the form of moving your mouse randomly. Some argue this isn’t true randomness or doesn’t have real value to the security, though I believe it’s certainly better than relying completely on the pseudo random generator algorithm, and most of all gives the feeling of security, which is just as important as having security. At this level of encryption the feeling of security is probably good enough, since the real security is already so high.

Passwords and Key Files

As far as password selection goes, TrueCrypt encourages you to select a password of at least 20 characters and has the option of specifying one or more key files together with your password. A key file is a file you select from storage. It can be seen as increasing the length of your password with the contents of these files. For example, if you select a key file to be the executable file of your calculator program, then the contents of this file will be used together with your password to protect your data. You can also have TrueCrypt generate a key file of selected length for you. The key files can be of any size, though TrueCrypt will only use the first megabyte of data.

So when you mount the drive you not only have to supply the password, but also select all of the key files that were configured for it. This can significantly improve security, especially if the key file is stored on a physical device like a security token or smart card. In this case, to decrypt the volume you need (on top of the password) the knowledge that a token is needed, the physical token itself, and its PIN.

The downside of key files is that if you lose the key file it would be very difficult to recover your data. If you select something like a file from your operating system and an update causes that file to change, then you will only be able to mount the drive if you get hold of that exact version of the file. So when using key files you need to be very careful to select files you won’t be likely to lose and which won’t be changed without you expecting it. When selecting key files it’s also important not to select ones which will be obvious to an attacker. For example, don’t select a key file named “keyfile.txt” which is in the same directory as the encrypted volume.
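One way to notice a changed key file before it locks you out is to keep a fingerprint of it. The following is only a sketch using the standard head and sha256sum tools, not a TrueCrypt feature; the function name is made up for illustration. It hashes just the first megabyte, since that is the only part TrueCrypt uses.

```shell
#!/bin/sh
# keyfile_fingerprint FILE: print a SHA-256 hash of the first megabyte of FILE.
# Hypothetical helper; only the first 1048576 bytes matter for a key file.
keyfile_fingerprint() {
    head -c 1048576 "$1" | sha256sum | cut -d ' ' -f 1
}

# Example (the path is an assumption):
#   keyfile_fingerprint /usr/bin/calculator
# Note the result down when creating the volume, then run it again
# after system updates and compare the two values.
```

Keep the recorded fingerprint somewhere that doesn’t advertise which file is being used as a key file.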

The better option is probably to have TrueCrypt generate the key file for you, and then use physical methods like a security token with a PIN to protect it. The benefit of security tokens used in this way can be visualized as having a password, but only those who have the correct token are allowed to use the password. So even if someone discovers the password they are unable to use it. And even if the token is stolen, without having the password it can not be used.

Hidden Volumes

TrueCrypt also has a function called a hidden volume, which is a form of steganography. This is where your encrypted container file, partition or hard drive contains a secret volume inside of it. So you end up having 2 passwords for your volume. If you try and mount this volume with the first (decoy) password, it would mount the outer or decoy volume. If you enter the 2nd (true) password it would mount the true or hidden volume. It’s possible to store data in both these volumes, which if done well will not give away the fact that the first volume is a decoy.

The benefit here is that if you are forced to hand over your password, you can give the password for the outer volume and thus not have anything you wish to remain private become exposed. With whole disk encryption you can even go as far as installing an operating system in both volumes, resulting in a hidden operating system altogether. So if you were to enter the hidden volume’s password you would boot into the installation of the hidden volume, and if you were to enter the outer volume’s password you would boot into the decoy operating system.

There is no way to determine whether a hidden volume exists within a particular TrueCrypt file/disk, not even when the decoy or outer volume is mounted or decoy operating system is booted. The only way to know this or mount it is to know the hidden volume’s password.

Conclusion

The primary reason I like TrueCrypt so much is that it makes it easy for anyone to protect their data, giving you many choices in doing so and allowing you to choose the balance between security and performance. And when it gives you options for security it gives you options to have a decent amount of it (key files and hidden volumes). TrueCrypt is also very easy to install and integrates well with the environment. For certain tasks it needs administrator permissions, and on Linux many programs require you to run them as root if they need such access. TrueCrypt was implemented well enough to ask you for the administrator access when it needs to have it. It also allows mounting on startup to be easily achieved. It’s all these small things which make your life easier.

I would recommend TrueCrypt to everyone. Store all your sensitive data in a TrueCrypt drive because you never know what might happen to it. You always have the choice of using your operating system’s native data encryption functionality, though TrueCrypt certainly has more features and makes all of them easily accessible and maintainable. Its GUI is also easy to use, and more advanced functionality like mount options is available when/where it’s needed.

To download or find out more, see http://www.truecrypt.org/.

So Why Love Linux? Because it has had a strong influence on the open source movement, resulting in high quality open source software like TrueCrypt.

[13 Jul 2014 EDIT: with the recent events with TrueCrypt I would probably think I was making assumptions when writing this… LOL]

Within the Blue Proximity

Overview

I read about the awesome little program called Blue Proximity. It’s a Python script that repeatedly measures the signal strength from a selected Bluetooth device. It then uses this knowledge to lock your computer if you are further away from it, and unlock it or keep it unlocked when you are close to it.

It’s very simple to set up. It has a little GUI from which you select which device you want to use for this and then specify the distance value at which to lock/unlock your computer, as well as the time delay for the lock/unlock process. The distance can’t be measured in meters/feet, but instead just in a generic unit. This unit is an 8-bit signed scale based on the signal strength measured from the device and isn’t terribly accurate. It’s not a perfect science and a lot of factors affect the reading.

So the general idea is that you try and get your environment as normal as you would usually have it and try different values for lock/unlock distances until you get a configuration that works best for you. There are a few more advanced parameters to play with as well. Especially the very useful ring buffer size, which allows you to effectively average that value over the last few readings, instead of using the raw value each time. It’s certainly worth playing around with these values until you find what gives you the best result.

You can even go as far as specifying the commands to be executed for locking/unlocking the screen. The default is probably sufficient for most purposes, but it’s definitely available for those that want to run other commands.

Beyond just locking/unlocking there is also a proximity command feature, which will ensure that the computer doesn’t lock from inactivity as long as you’re close to it. This is very useful for times where you’re watching a movie or presentation and don’t want the screen to keep locking just because you didn’t move the mouse or type on the keyboard.

My Setup

Before I had this program I would have my computer lock after a 10 minute idle period. Then if I return it would almost be automatic for me to start typing my password. The Gnome lock screen is optimized cleverly, in that you can simply start typing your password even if the password dialog doesn’t display yet. It will recognize the first key press in a locked state as an indication of your intent to unlock the screen as well as use it for the first character of your password.

After I configured and hacked Blue Proximity to my liking the screen would lock as soon as I’m about 3 meters away from the computer, and unlock when I’m right in front of it. I configured a 10 second ring buffer to average the reading it gives over the readings for the past 10 seconds. I also made 0 or higher values (closest reading to the computer) count as double entries. Meaning when 0 values are being read it will average down to 0 twice as fast. This allows for it to be more stable when moving around, but unlock very quickly when standing right next to the machine. It all works very well.
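The averaging idea can be sketched in a few lines of shell. This is not Blue Proximity’s actual code (which is Python), just an illustration under my own assumptions: one reading per second, a buffer of 10 entries, and readings of 0 or higher inserted twice.

```shell
#!/bin/sh
# Illustrative ring buffer; all names here are made up for this sketch.
BUFFER_SIZE=10
readings=""   # space-terminated list, oldest reading first
count=0

# add_reading VALUE: store a reading, counting 0-or-higher values double.
add_reading() {
    copies=1
    if [ "$1" -ge 0 ]; then
        copies=2
    fi
    while [ "$copies" -gt 0 ]; do
        readings="$readings$1 "
        count=$((count + 1))
        copies=$((copies - 1))
    done
    # Drop the oldest entries once the buffer is over its size.
    while [ "$count" -gt "$BUFFER_SIZE" ]; do
        readings=${readings#* }
        count=$((count - 1))
    done
}

# average: print the integer average of the buffered readings.
average() {
    [ "$count" -gt 0 ] || return 1
    sum=0
    for r in $readings; do
        sum=$((sum + r))
    done
    echo $((sum / count))
}
```

With a full buffer of far-away readings, each 0 reading pushes out two old entries instead of one, which is why the average reaches 0 in about half the time.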

It’s been a few days now, and still when I get to the computer and it unlocks by itself I’m amused. Sometimes I even start getting ready to enter my unlock password when the screen is automatically unlocked. Very amusing.

It’s not perfect, and sometimes the screen would lock while I’m busy using the computer and then immediately unlock again. This is to be expected from the nature of wireless technologies, though I’m sure a bit more hacking and tuning will get it at least as close to perfect as it can be.

Conclusion

It’s typical of the software world to always produce amusing and fun utilities like this one. This one is definitely one of my favorites.

So Why Love Linux? Because there are tons of free and open source programs and utilities of all kinds.

Bootable Computer Doctor

The Story

A live distribution is a Linux distribution you can run from a pen drive or CDROM drive, without having to first install it onto your hard drive. This is where the name “live” comes from, as it can be run “live without installation”.

Many times I use some Live CD to run diagnostics, recover a corrupted boot sector, reset the password for a Windows/Linux machine and many other similar tasks provided by live distributions.

You get very useful Live distributions these days. They are most often configured for either CD/DVD or USB pendrive, though some of the smarter ones can use the same image to boot from either. Beyond this, when you boot from them you will (mostly) get a syslinux bootloader screen from which you select what you want to boot.

Here are a few very useful example live distros that I use:

- SystemRescueCd

- Ultimate Boot CD

- Trinity Rescue Kit

- Puppy Linux

- DamnSmallLinux

- Pentoo Linux

So if I just wanted to reset the password for a Windows machine, I would burn a CD from the Ultimate Boot CD image and boot from it. It then has an option for booting ntpasswd, which allows editing of the Windows registry and resetting an account’s password.

In another scenario if I just wanted to reinstall Grub, my Linux bootloader, I would boot DamnSmallLinux to get a terminal from which I can reconfigure my boot loader.

The problem is that depending on what I need to do I might need a different Live distribution every time. I used to carry CDs for each of these, but I would forget a CD in the drive, and sometimes I don’t have my CDs with me, or the machine doesn’t have a CDROM drive. The ideal situation would be to have all of this on a USB pen drive which I carry with me all the time anyway.

So after investigating this, it turned out that on their own the distros don’t like sharing their boot drive with others. Some of them didn’t even support booting from pen drives (only CD-ROMs).

Open Source DIY

Being open source all these problems are just hurdles to overcome. Nothing more.

I downloaded all of them, and one by one extracted their images into a directory structure of my choice. To ensure they don’t pollute the root directory of my pen drive I put them all into a syslinux subdirectory. This alone will break some of them since these want their files in the root of the filesystem. Again, just something to hack out.

After this I made my own syslinux bootloader configuration, which has a main menu with an item for each of the distros. If you select one of these items, its original menu will be loaded. I also modified their menus to create an item that will return back to my main menu.
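To give an idea of what such a main menu looks like, here is a small sketch that generates one. The directory names and the layout under /syslinux are assumptions for illustration, not the exact structure I used, and it relies on syslinux’s CONFIG directive to hand over to each distro’s own configuration file.

```shell
#!/bin/sh
# generate_menu NAME...: print a syslinux main menu with one entry per distro.
# Assumes each distro lives in /syslinux/NAME with its own syslinux.cfg.
generate_menu() {
    echo "UI menu.c32"
    echo "MENU TITLE Zeta"
    for distro in "$@"; do
        echo
        echo "LABEL $distro"
        echo "    MENU LABEL $distro"
        # CONFIG loads another configuration file; the second argument
        # sets the working directory the distro's menu will run from.
        echo "    CONFIG /syslinux/$distro/syslinux.cfg /syslinux/$distro"
    done
}

# Hypothetical directory names for the six distros.
generate_menu sysrescuecd ubcd trk puppy dsl pentoo
```

Redirecting the output to /syslinux/syslinux.cfg on the pen drive would give the top level menu; each distro’s own menu then needs a similar entry pointing back to it.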

On top of the structure of the menus I had to modify the actual boot loader configurations to support my new directory structure as well.

Further, to speed up the development of this I made a mirror image of the whole pen drive into a file, and then used this for developing my distro collection. For testing I would boot the image with QEMU. This also made it easy to back up the image, boot multiple instances and revert to previous versions.

Once I had all of the boot menus and boot loaders configured correctly, all that was left was to actually test all the images and make sure they loaded correctly. This required making some more modifications to the distros’ own init scripts (since they either didn’t support pen drives or because I changed the directory structure they were expecting).
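The image workflow looks roughly like the following sketch. The device name /dev/sdX and the file names are placeholders, and the script only prints the commands unless DRY_RUN is set to 0, since pointing dd at the wrong device is an easy way to lose data.

```shell
#!/bin/sh
# Placeholders: replace with your own device and file names.
DEVICE=${DEVICE:-/dev/sdX}
IMAGE=${IMAGE:-zeta.img}

# run CMD...: print the command, and execute it only when DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

# Mirror the whole pen drive into a file.
run dd if="$DEVICE" of="$IMAGE" bs=4M
# Keep a copy to revert to before experimenting.
run cp "$IMAGE" "$IMAGE.bak"
# Boot the image in QEMU as a raw disk with 512 MB of memory.
run qemu-system-x86_64 -m 512 -drive file="$IMAGE",format=raw
```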

Zeta, the conclusion

In the end I had a bootable pen drive with 6 different live distributions on it, some with both 32bit and 64bit versions available.

I named it Zeta, the sixth letter of the Greek alphabet – which has a value of 7. The intention was to add a 7th distribution. I left open some space for it and will be adding it in the near future (as soon as I’ve decided on which one to add). In the meantime there are 6 other very useful distributions.

Drop me a message if you want the image and instructions for installing it onto your own pendrive.

Here are a few screen shots of my resulting work:

So Why Love Linux? Because within hours I was able to make a “computer doctor” pendrive distribution.

Tunneling with SSH

Tunneling

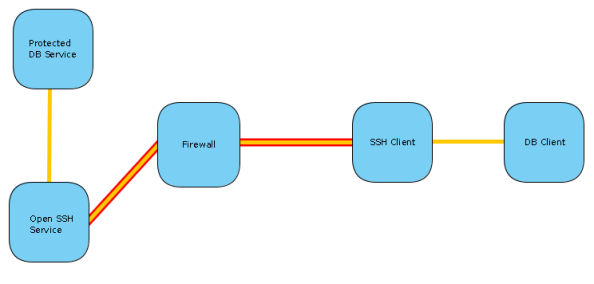

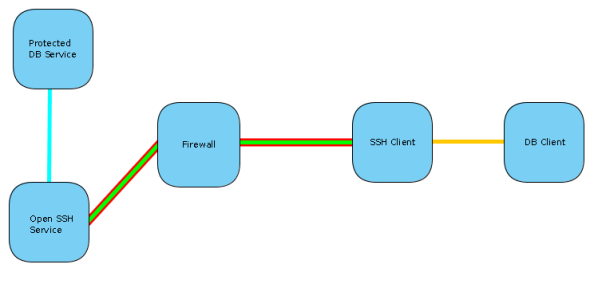

Assume you have a database service, protected from any external traffic by a firewall. In order for you to connect to this service you need to first get past the firewall, and this is where the tunnel comes in. The firewall is configured to allow SSH traffic to another host inside the network, and it’s via the SSH service that the tunnel will be created.

So you would have an SSH client connect to the SSH server and establish a tunnel. Then any traffic from the database client will be intercepted and sent via the tunnel. Once it reaches the host behind the firewall, the traffic will be transmitted to the database server and the connection will be established. Now any data sent/received will be traveling along the tunnel and the database server/client can communicate with one another as if there is no firewall.

This tunnel is very similar to an IPSec tunnel, except not as reliable. If you need a permanent tunnel to protect production service traffic, I would rather use an IPSec tunnel for this. This is because failed connections between the tunnel ends are not automatically reestablished with SSH as they are with IPSec. IPSec is also supported by most firewall and router devices.

TCP Forwarding

TCP forwarding is a form of tunneling, and very similar to it. The difference is that in the tunneling described above the DB client will establish a connection as if directly trying to connect to the database server, and the traffic will be routed via the tunnel. With TCP forwarding the connection will be established to the SSH client, which is listening on a configured port. Once this connection is established the traffic will be sent to the SSH server and a preconfigured connection established to the database from that side.

So where tunneling can use multiple ports and destinations configured on a single tunnel, a separate TCP forwarding tunnel needs to be established for each host/port pair. This is because the whole connection is preconfigured. You have a static port on the client side listening for traffic. Any connection established with this port will be directed to a static host/port on the server side.

Of all the SSH tunneling methods, TCP forwarding is the easiest to set up and the most widely supported in third party clients.
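With OpenSSH, TCP forwarding is done with the -L option. The sketch below wraps it in a small function; the host names, user and port numbers in the example are made up, and the SSH variable exists only so the command can be swapped out for testing.

```shell
#!/bin/sh
SSH=${SSH:-ssh}

# forward_port LOCAL_PORT TARGET_HOST TARGET_PORT SSH_HOST
# Listen on LOCAL_PORT and forward connections via SSH_HOST to TARGET_HOST:TARGET_PORT.
forward_port() {
    # -N: don't run a remote command, just keep the forwarding open.
    # -L: local port forwarding (listen here, connect from the server side).
    "$SSH" -N -L "$1:$2:$3" "$4"
}

# Example with hypothetical names:
#   forward_port 3307 db.internal 3306 user@gateway.example.com
# The database client then connects to localhost port 3307.
```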

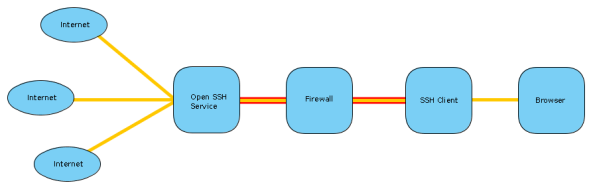

SOCKS Proxy

This is a very exciting and useful tunneling method in OpenSSH. It can be seen as dynamic TCP forwarding for any client supporting SOCKS proxies.

So what you would do is configure the SSH client to create a SOCKS proxy on a specified local port. Then your browser is configured to use this port as a SOCKS proxy. Any web sites you will be visiting will be tunneled via the SSH connection and appear to be coming from the server the SSH service is hosted on.

This allows you to get past filtering proxies. So if your network admin filters certain sites like Facebook, but you have SSH access to a network where such filtering doesn’t happen, you can connect to this unfiltered network via SSH and establish a SOCKS proxy. Using this tunnel you can freely browse the internet.
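In OpenSSH the SOCKS proxy is enabled with the -D option. Here is a minimal sketch; the port, user and host in the example are assumptions, and as before the SSH variable is only there so the command can be replaced when testing.

```shell
#!/bin/sh
SSH=${SSH:-ssh}

# socks_proxy LOCAL_PORT SSH_HOST
# Open a SOCKS proxy on LOCAL_PORT that sends traffic out via SSH_HOST.
socks_proxy() {
    # -N: don't run a remote command.
    # -D: dynamic forwarding, i.e. act as a SOCKS proxy on the given local port.
    "$SSH" -N -D "$1" "$2"
}

# Example with hypothetical names:
#   socks_proxy 1080 user@unfiltered.example.com
# Then point the browser at SOCKS host localhost, port 1080, or test with:
#   curl --socks5-hostname localhost:1080 http://example.com/
```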

Why is this useful?

Since SSH traffic is encrypted, all of these methods can be used to protect against sniffers on the internet and in your local network. The only parts that are not encrypted would be between you and the SSH client (very often configured on your own PC) and the part between the SSH server and the destination (very often a LAN). So if you have software using a network protocol that doesn’t support encryption by itself, or which has a very weak authentication/encryption method, an encrypted tunnel can be used to protect its traffic.

Also, if you need to get past firewalls for certain services, and you already have SSH access to another host that is allowed past the firewall for these services, you can get the desired access by tunneling via the SSH host.

Depending on your tunnel configuration, you can hide your identity. This is especially true for SOCKS proxy and TCP forwarding tunnels (but also possible with standard tunnels). The source of the connection will always appear to be the server end establishing the tunneled connection.

Avoiding being shaped. SSH traffic is many times configured to be high priority/low latency traffic. By tunneling via SSH you basically benefit from this, because the party shaping the traffic cannot distinguish between tunneled SSH traffic and terminal SSH traffic.

There are many more use cases for this extremely powerful technology.

So Why Love Linux? Because it comes with OpenSSH installed by default.

Flexible Firewall

I have a very strict incoming filter firewall on my laptop, ignoring any incoming traffic except for port 113 (IDENT), which it rejects with a port-closed ICMP packet. This is to avoid delays when connecting to IRC servers.

Now, there is an online test at Gibson Research Corporation called ShieldsUP!, which tests to see if your computer is stealthed on the internet. What they mean by stealth is that it doesn’t respond to any traffic originating from an external host. A computer in “stealth” is obviously a good idea since bots, discovery scans or a stumbling attacker won’t be able to determine if a device is behind the IP address owned by your computer. Even if someone were to know for sure a computer is behind this IP address, being in stealth means less information can be discovered about your computer. A single closed and open port is enough for Nmap to determine some frightening things.

So, since I reject port 113 traffic I’m not completely stealthed. I wasn’t really worried about this, though. But I read an interesting thing on the ShieldsUP! page about ZoneAlarm adaptively blocking port 113 depending on whether or not your computer has an existing relationship with the IP requesting the connection. This is clever, as it would ignore traffic to port 113 from an IP, unless you have previously established a connection with the same IP.

Being me, I found this very interesting and decided to implement this in my iptables configuration. The perfect module for this is obviously ipt_recent, which allows you to record the address of a packet in a list, and then run checks against that list with other packets passing through the firewall. I was able to do this by adding a single rule to my OUTPUT chain, and then modifying my existing REJECT rule for port 113. It was really that simple.

The 2 rules can be created as follows:

-A OUTPUT ! -o lo -m recent --name "relationship" --rdest --set

-A INPUT ! -i lo -p tcp -m state --state NEW -m tcp --dport 113 -m recent --name "relationship" --rcheck --seconds 60 -j REJECT --reject-with icmp-port-unreachable

The first rule will match any packet originating from your computer intended to leave the computer and record the destination address into a list named relationship. So all traffic leaving your computer will be captured in this list. The second rule will match any traffic coming into the computer for port 113 to be checked against this relationship list, and if the source IP is in this list and has been communicated with in the last 60 seconds, the packet will be rejected with the port-closed ICMP response. If these conditions aren’t satisfied, then the action will not be performed and the rest of the chain will be evaluated (which in my case results in the packet being ignored).
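The same two rules can also be added to a running firewall with the iptables command, as in the sketch below. The function names are made up, the IPTABLES variable is only there so the command can be replaced when testing, and the /proc path is where recent kernels expose the list (older ones used /proc/net/ipt_recent instead). Adding the rules needs root.

```shell
#!/bin/sh
IPTABLES=${IPTABLES:-iptables}

# add_relationship_rules: append the two rules to the running firewall.
add_relationship_rules() {
    # Record the destination address of everything leaving the machine.
    "$IPTABLES" -A OUTPUT ! -o lo -m recent --name relationship --rdest --set
    # Reject port 113 only for addresses seen in the last 60 seconds.
    "$IPTABLES" -A INPUT ! -i lo -p tcp -m state --state NEW -m tcp --dport 113 \
        -m recent --name relationship --rcheck --seconds 60 \
        -j REJECT --reject-with icmp-port-unreachable
}

# show_relationships: print the addresses currently in the list.
show_relationships() {
    cat /proc/net/xt_recent/relationship
}
```

Note that -A appends to the end of the chain, so the INPUT rule must still come before whatever rule drops the rest of the traffic.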

Note that these 2 rules alone won’t make your PC pass this “stealth test”. For steps on setting up a stealth firewall, see the Adaptive Stealth Firewall on Linux guide.

So Why Love Linux? Because built into the kernel is netfilter, an extremely powerful, secure and flexible firewall, and iptables allows you to easily bend it to your will.